Byte-Sized Lesson: 4K60 and the Network

The AV industry is buzzing about 4K video, particularly 4K60. Some argue that 4K60 requires a 10 Gbps network; others say that isn’t true. In this lesson, I will provide general background information that should help you analyze these claims. Note, however, that any new system should be thoroughly vetted, and my comments here are provisional.

First, my analysis will be from the IT perspective. IT is generally responsible for an organization's network, so IT managers and teams are concerned with the bandwidth requirements of every application. Second, I won’t discuss latency except to make a few observations. In my opinion, the term "zero latency" is overused and ill-defined. From the IT viewpoint, the latency that the Ethernet network introduces is very small compared to the other contributors to glass-to-glass latency.

We need to better understand the term 4K60 in order to make our assessment. In 4K video, there are five main factors that determine the bit rate:

(1) resolution

(2) frame rate

(3) bit depth

(4) chroma subsampling method

(5) compression method used

4K has twice the horizontal and twice the vertical resolution of 1080 HD. That alone multiplies the bit rate by four. The frame rate is usually 30 or 60 frames per second. Here we’ll assume the latter since it is the rate most often discussed. The bit depth, the number of bits used to represent each color or brightness sample of a pixel, is generally 8 or 10. Chroma subsampling is a little complicated, but it has to do with whether color information is carried for every pixel or only for a portion of them. The schemes are nearly always 4:4:4, 4:2:2, or 4:2:0. The first does the best job of representing the color and requires the most bits. The last uses the fewest bits and does the poorest job of representing the scene. However, 4:2:0 is perfectly acceptable for most enterprise video. Finally, concerning the last factor, the video can be left uncompressed or compressed with JPEG 2000, H.264, or H.265.
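To make the interaction of the first four factors concrete, here is a minimal Python sketch of the uncompressed bit rate. It assumes 3840x2160 active pixels for 4K (twice 1920x1080 in each direction) and ignores blanking, packet headers, and compression. The function name and the samples-per-pixel table are illustrative choices, not taken from any standard API.

```python
# Average samples per pixel for each chroma subsampling scheme:
# one luma sample per pixel plus two chroma planes at reduced resolution.
SAMPLES_PER_PIXEL = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}


def raw_bit_rate_gbps(width, height, fps, bit_depth, chroma):
    """Uncompressed bit rate in Gbps (10^9 bits/s), active pixels only."""
    bits_per_pixel = bit_depth * SAMPLES_PER_PIXEL[chroma]
    return width * height * fps * bits_per_pixel / 1e9


if __name__ == "__main__":
    hd = raw_bit_rate_gbps(1920, 1080, 60, 8, "4:2:0")
    uhd = raw_bit_rate_gbps(3840, 2160, 60, 8, "4:2:0")
    print(f"1080p60 8-bit 4:2:0: {hd:.2f} Gbps")
    print(f"4K60    8-bit 4:2:0: {uhd:.2f} Gbps ({uhd / hd:.0f}x the HD rate)")

    # Sweep bit depth and chroma subsampling at 4K60.
    for chroma in SAMPLES_PER_PIXEL:
        for depth in (8, 10):
            rate = raw_bit_rate_gbps(3840, 2160, 60, depth, chroma)
            print(f"4K60 {depth}-bit {chroma}: {rate:.2f} Gbps")
```

The sweep shows how quickly the rate climbs when moving from 8-bit 4:2:0 toward 10-bit 4:4:4, before any compression is applied.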

When the encoded digital video stream is created, it is packetized for transmission on the Ethernet network. This stream will be very large if not compressed or only lightly compressed. One source (Belden) gives these examples:

● 8 bit, 4:2:0, 4400x2250, 60 fps creates 8.91 Gbps

● 10 bit, 4:2:0, 4400x2250, 60 fps creates 11.14 Gbps
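The two figures above can be reproduced with simple arithmetic under one assumption that the source does not state: that the rate includes a 10/8 line-coding factor, as used by 8b/10b coding on video links. The 4400x2250 raster is larger than the 3840x2160 active picture, which suggests it includes blanking. The following Python sketch is a plausible reconstruction under those assumptions, not Belden's published method, and the function name is illustrative.

```python
def link_rate_gbps(total_width, total_height, fps, bit_depth,
                   samples_per_pixel, line_coding=10 / 8):
    """Bit rate in Gbps for a full raster, including a line-coding factor.

    line_coding=10/8 is an ASSUMPTION chosen because it reproduces the
    cited figures; it is not stated in the source.
    """
    pixel_clock = total_width * total_height * fps      # pixels per second
    payload_bits = pixel_clock * bit_depth * samples_per_pixel
    return payload_bits * line_coding / 1e9


if __name__ == "__main__":
    # 4:2:0 carries 1.5 samples per pixel on average.
    for depth in (8, 10):
        rate = link_rate_gbps(4400, 2250, 60, depth, 1.5)
        print(f"{depth}-bit 4:2:0, 4400x2250, 60 fps: {rate:.4f} Gbps")
```

Without the 10/8 factor, the same raster at 8 bits gives about 7.13 Gbps, so the assumption matters when comparing these numbers with figures from other sources.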

Packet headers (Ethernet, IP, UDP, and RTP) will add 4-7% to this rate, which makes it seem nearly impossible to carry 4K60 on anything less than a 10 Gbps network. According to Spirent, a well-known manufacturer of network test equipment, the maximum throughput actually achievable on a 10G network is about 9.7 Gbps. With the overhead mentioned, the first format above would need up to about 9.5 Gbps. This is dangerously close to the limit, and I believe the IT department will see it exactly that way. The 10-bit format exceeds the limit even before the overhead is added.

If the end devices need such high levels of bandwidth, even using VLANs will only slightly improve the situation. In the core of the network, VLAN trunks between switches would need even more bandwidth. And I don’t think any network manager would consider allowing this traffic to intermingle with traditional data applications like file transfers or database interaction.

The conclusion seems inescapable: mixing 4K with the traditional IT network will require compressing the video, as is done in H.264 and H.265. My own experiments with H.264 compression on large video files indicate that compression of 50% or more can provide adequate video quality for the vast majority of enterprise video applications. This would allow the 4K60 formats above to be transported in 2-6 Gbps. Using 30 frames per second would cut these rates in half, which would require 1-3 Gbps links to the end point and standard VLAN trunking in the core. In my opinion, IT might be convinced to allow this within the architecture of the network.
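The arithmetic behind these estimates can be sketched in a few lines of Python. The stream rates, the 4-7% header overhead, and the 9.7 Gbps usable figure come from the text above. The 75% reduction is an illustrative assumption standing in for "50% or more", and the function names are hypothetical.

```python
USABLE_10G_GBPS = 9.7            # usable 10G throughput cited above
HEADER_OVERHEAD = (0.04, 0.07)   # Ethernet/IP/UDP/RTP overhead range cited above
STREAMS_GBPS = {"8-bit 4:2:0": 8.91, "10-bit 4:2:0": 11.14}


def with_overhead(stream_gbps, overhead):
    """On-the-wire rate after adding packet header overhead."""
    return stream_gbps * (1 + overhead)


def compressed(stream_gbps, reduction, fps_scale=1.0):
    """Rate after removing `reduction` (0..1) of the bits.

    fps_scale=0.5 models dropping from 60 to 30 frames per second.
    """
    return stream_gbps * (1 - reduction) * fps_scale


if __name__ == "__main__":
    low, high = HEADER_OVERHEAD
    for name, rate in STREAMS_GBPS.items():
        worst = with_overhead(rate, high)
        print(f"{name}: {with_overhead(rate, low):.2f}-{worst:.2f} Gbps on the wire, "
              f"headroom {USABLE_10G_GBPS - worst:+.2f} Gbps")

    # 0.75 is an assumed example of "more than 50%" compression.
    for reduction in (0.50, 0.75):
        for name, rate in STREAMS_GBPS.items():
            at_60 = compressed(rate, reduction)
            at_30 = compressed(rate, reduction, fps_scale=0.5)
            print(f"{name}, {reduction:.0%} reduction: "
                  f"{at_60:.2f} Gbps at 60 fps, {at_30:.2f} Gbps at 30 fps")
```

With these inputs, the compressed 60 fps rates fall within the 2-6 Gbps range given above, and the 30 fps rates fall within 1-3 Gbps.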

Phil Hippensteel, PhD, is a regular AV Technology contributor. He teaches at Penn State Harrisburg.

INFO

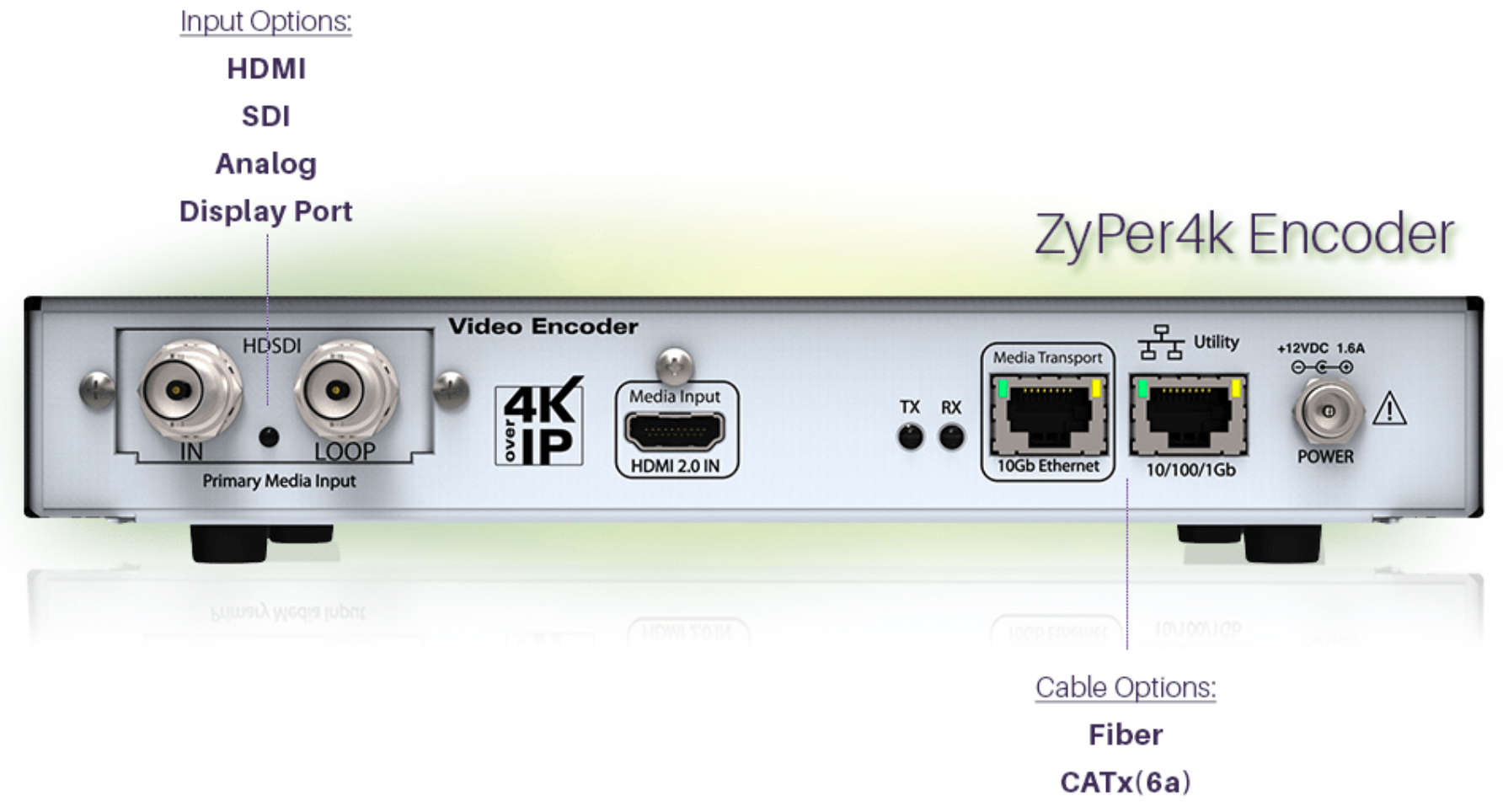

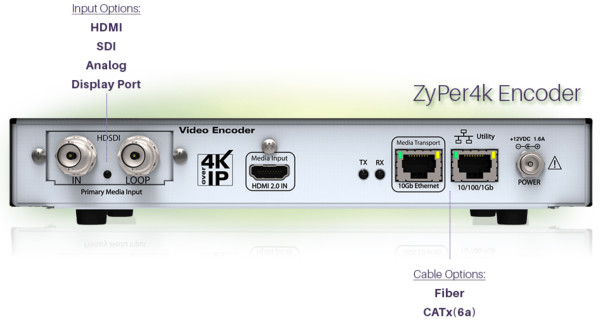

ZeeVee