What the AV Industry Can Learn From SMPTE 2110

While the AV world is approaching IP-based media transmission from a number of different directions, its counterparts on the broadcast side are arriving at a consolidated solution for their transport needs.

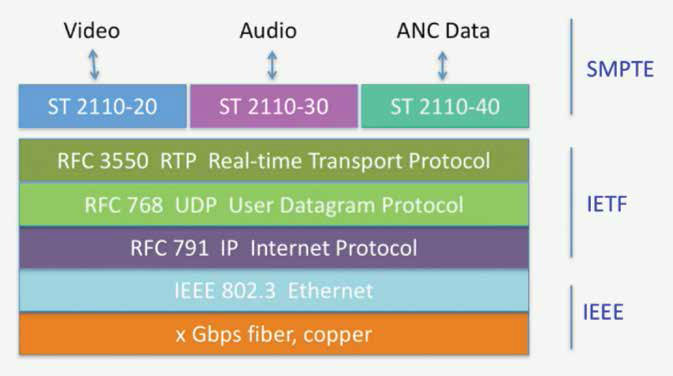

After years of work by several industry organizations, the SMPTE (Society of Motion Picture and Television Engineers) 2110 standards are being published. The VSF (Video Services Forum), AES (Audio Engineering Society), and EBU (European Broadcasting Union) joined SMPTE in the effort. They also used existing standards from the IETF (Internet Engineering Task Force) and the IEEE (Institute of Electrical and Electronics Engineers) to build a protocol architecture that is open and IT friendly. However, to understand the position of the SVIP (SMPTE Video over IP) suite, we must look at the networks often used to deliver video.

The broadcast industry is focused on the contribution network, where video is created and processed. Typically, broadcasters deliver the video to the carrier network, which is made up of regulated service providers. The content is then delivered over enterprise networks or access connections to homes and businesses. What we are inclined to call the AV industry is focused on this last part of the delivery.

As a result, we might wonder why this 2110 effort is of importance to us. But there are several reasons why AV professionals should pay attention to it:

- The needs of the broadcast, pro AV, and AV segments appear to be slowly merging. Users are demanding high-quality, low-latency video with no or little compression.

- The 2110 suite is an excellent example of building on standards previously in wide use in IT.

- Much of what is proposed could be used in current AV implementations that rely on proprietary technologies.

- While the AV industry is debating the need for 1Gb versus 10Gb networks, the 2110 planners already have incorporated 25Gb, 40Gb, and 100Gb networks in their roadmap for the future.

- The development of the 2110 suite is an example of cooperation between groups to meet a common need. This could be contrasted with the disagreements between several AV implementations that claim to be based on standards.
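To put the network-speed point above in perspective, here is a rough back-of-the-envelope sketch of what uncompressed video demands. It assumes 4:2:2 sampling at 10 bits per sample (20 bits per pixel on average), counts only the active picture, and ignores RTP, UDP, IP, and Ethernet overhead. The function name and the chosen formats are illustrative, not taken from the standard.

```python
def active_video_bps(width, height, fps, bits_per_pixel):
    """Bit rate of the active picture only; packet overhead is ignored."""
    return width * height * fps * bits_per_pixel

# Assumption: 4:2:2 sampling at 10 bits per sample averages 20 bits per pixel.
BITS_PER_PIXEL_422_10 = 20

hd = active_video_bps(1920, 1080, 60, BITS_PER_PIXEL_422_10)
uhd = active_video_bps(3840, 2160, 60, BITS_PER_PIXEL_422_10)

for name, bps in (("1080p60", hd), ("2160p60", uhd)):
    print(f"{name}: {bps / 1e9:.2f} Gb/s")
# 1080p60: 2.49 Gb/s
# 2160p60: 9.95 Gb/s
```

Under these assumptions a single uncompressed 1080p60 stream already exceeds a 1Gb link, and a single 2160p60 stream nearly fills a 10Gb link before any packet overhead is added.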

The SVIP suite has several main parts:

- ST 2110-10 “System Overview”

- ST 2110-20 “Uncompressed Active Video” based on RFC 4175

- ST 2110-30 “PCM Audio” based on AES67

- ST 2110-40 “Ancillary Data”

There are other important parts, but considering these four will give us a good education about what the suite is all about. 2110-10, -20, and -30 have been ratified as of IBC 2017. 2110-40 is reported to be very near to ratification and already is in use in many products.

The standards were developed by the JT-NM (Joint Task Force on Networked Media), which was made up of representatives from the groups mentioned at the beginning of the article. They had some specific goals:

- Use of existing IT standards so that commodity Ethernet networks could be used.

- Avoiding the use of proprietary techniques and technologies.

- Preservation of very accurate timing, recorded and transmitted in the RTP (Real-time Transport Protocol) header.

- Transport of separate elementary streams for video, audio, and metadata.

The first goal is illustrated nicely in Figure 1, which is derived from SMPTE presentations. Experienced IT professionals will recognize this as essentially the same architecture used by nearly all existing IP applications.

The third and fourth goals need to be explained more completely. In the current, widely used transport method, MPEG Transport Stream (MPTS), the timing values are embedded in small MPEG packets, which are carried in the data field of each IP packet. The audio, video, and data MPEG packets are also multiplexed (mixed), so each IP packet might contain timing, media, and data for any or all of the video and audio sources. Let’s say the media from one of the sources, the right audio channel, needs to be amplified. The stream must be de-multiplexed (separated), processed for amplification, and finally re-multiplexed to be sent forward. This is an inefficient way to handle the separate elementary streams. Therefore, under the 2110 scheme, video, left audio, right audio, and ancillary data are carried in separate IP streams. This doesn’t mean that they will require separate addresses; the sending encoder can keep the streams apart by using separate UDP port numbers. Either way, carrying the streams separately makes it easy to access the various components of the user presentation and enhance, copy, store, scale, or otherwise process them.
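The idea of keeping elementary streams apart by UDP port can be sketched in a few lines. This is a minimal illustration, not an ST 2110 implementation: the port numbers, essence names, payload type, and helper functions are all hypothetical. Only the fixed 12-byte RTP header layout comes from RFC 3550.

```python
import struct

# Hypothetical port plan: one destination address, one UDP port per essence.
PORT_PLAN = {
    5004: "video",
    5006: "audio-left",
    5008: "audio-right",
    5010: "ancillary",
}

# Fixed RTP header (RFC 3550): flags, marker/payload type, sequence,
# timestamp, SSRC, all in network byte order.
RTP_HEADER = struct.Struct("!BBHII")

def build_rtp(payload_type, seq, timestamp, ssrc, payload=b""):
    """Pack a version-2 RTP packet with no padding, extension, or CSRCs."""
    first = 2 << 6
    header = RTP_HEADER.pack(first, payload_type & 0x7F, seq & 0xFFFF,
                             timestamp & 0xFFFFFFFF, ssrc & 0xFFFFFFFF)
    return header + payload

def parse_rtp(packet):
    """Unpack the fixed header and return its fields plus the payload."""
    first, second, seq, timestamp, ssrc = RTP_HEADER.unpack_from(packet)
    return {
        "version": first >> 6,
        "payload_type": second & 0x7F,
        "seq": seq,
        "timestamp": timestamp,
        "ssrc": ssrc,
        "payload": packet[RTP_HEADER.size:],
    }

def route(dst_port, packet):
    """Pick the essence from the destination port; no de-multiplexing needed."""
    return PORT_PLAN[dst_port], parse_rtp(packet)

pkt = build_rtp(payload_type=97, seq=1, timestamp=48000, ssrc=0x1234,
                payload=b"\x00\x01")
essence, fields = route(5008, pkt)
print(essence, fields["seq"], fields["timestamp"])
```

A processor that only needs to amplify the right audio channel would listen on that one port and never touch the video or ancillary packets, which is the contrast with the de-multiplex and re-multiplex cycle described above.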

There are some technical details that also should be mentioned. In the audio industry, precise timing is critical. That’s the argument behind AVB and Dante. ST 2110-10 specifies the use of SMPTE 2059 PTP (Precision Time Protocol). 2059 is in turn based on IEEE 1588, which is widely endorsed by both the audio and video industries. The RTP timing in the IP packets is locked to the PTP time, which keeps the various streams tightly synchronized. 2110-10 also specifies the use of SDP (Session Description Protocol) as stipulated in RFC 4566. SDP was first used in VoIP as part of SIP (Session Initiation Protocol) nearly two decades ago.
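One way to picture RTP timing being locked to PTP time is the sketch below. It assumes the RTP timestamp is simply the PTP time in seconds multiplied by the media clock rate, truncated to the 32-bit RTP timestamp field, with zero offset from the PTP epoch. It also assumes a 90 kHz clock for video and 48 kHz for audio. The function name is hypothetical.

```python
from fractions import Fraction

RTP_TIMESTAMP_MODULUS = 2**32  # the RTP timestamp field is 32 bits wide

def rtp_timestamp(ptp_seconds, clock_rate_hz):
    """Media clock ticks since the PTP epoch, wrapped to 32 bits."""
    ticks = int(Fraction(ptp_seconds) * clock_rate_hz)
    return ticks % RTP_TIMESTAMP_MODULUS

# Assumed media clock rates: 90 kHz for video, 48 kHz for audio.
VIDEO_CLOCK_HZ = 90_000
AUDIO_CLOCK_HZ = 48_000

# A hypothetical capture instant shared by a video frame and an audio packet.
capture_time = Fraction(1_500_000_000) + Fraction(1, 2)

video_ts = rtp_timestamp(capture_time, VIDEO_CLOCK_HZ)
audio_ts = rtp_timestamp(capture_time, AUDIO_CLOCK_HZ)
print(video_ts, audio_ts)
```

Because both timestamps derive from the same PTP instant, a receiver that also tracks PTP can line up the separately delivered video and audio without any shared multiplex.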

For this article we interviewed Jack Douglass, vice president of business development at PacketStorm Communications. Douglass is chair of SMPTE 32NF-80 DG Interop ST2059 and chair of the VSF and JT-NM Interop Activity Group. He said that the various organizations have been committed to meeting the needs of broadcasters while using as many existing IT (TCP/IP) standards as possible. He described an interoperability lab at NAB 2018 that involved equipment from more than 60 vendors and performed exactly according to the specifications in ST 2110. Because of his company’s belief in the guidance offered by the SVIP standards, they’ve modified their network emulator product to do deep analysis of the 2110 streams. Their product, the Hurricane VIP Media Analyzer, can give very complete descriptions of virtually all the timing, packet contents, and buffer behavior from the transmission point to the receiver point. Douglass also discussed the strict requirements placed on Ethernet switches and demonstrated that several off-the-shelf switches failed to meet the latency requirements for relaying Ethernet frames.

At IBC 2017, a panel of experts from SMPTE and the EBU discussed the 2110 suite. The discussion is available on YouTube and we recommend that you view it. It provides a detailed description of the parts of the standard, how they relate to existing standards, and how the standards are expected to be used.

Let’s summarize what we believe to be important about this new suite of standards. They have been developed by broadcasters for the broadcast industry. However, much of what is specified can be applied nicely to other parts of the larger AV industry. Standards developed by one sector being used in another sector is essentially how we developed VoIP, videoconferencing, AVB audio, and adaptive bitrate video. We should recall that the original motivation behind the development of Ethernet was to deliver print files to printers on behalf of Xerox. It would have been almost impossible to imagine that we would use this technology in the myriad ways we do today. The timing methods, encapsulation structure, commitment to open standards, and reuse of existing standards may provide an excellent roadmap for the use of technologies across the AV industry. Therefore, it may be worth pondering whether the wide variety of delivery methods in AV (ABR, SRT, SDVoE, SDI over IP, QUIC, RTSP/TCP/UDP, MPTS with and without RTP) could be consolidated with methods similar to those used by SMPTE.