The Cloud Can Protect Corporate Media Assets—Here's How

Experts say you should plan for disaster with asset recovery policies—and these days, the cloud can be part of your storage model.

While the pandemic has been credited with accelerating cloud adoption, the enterprise market has embraced the cloud for some time.

Sunil Mudholkar, vice president of product management at EditShare, a networked shared storage and workflow solutions developer headquartered in Watertown, MA, noted that in large part, this is because these organizations have been running IT operations on providers like AWS. “What we find is that the cloud knowledge of our enterprise and corporate customers, on average, tends to be a little bit higher than your average post house, who may have had limited experience with the cloud in the past,” he said.


Still, organizations need help optimizing the protection and storage of their AV media assets against disaster, which means the AV integrators that serve them must be fluent in the cloud. Here are some things to consider.

Evolving 1-2-3 Model

Even the most basic disaster recovery policy should mandate three copies of every asset, each residing in a different location. Liz Davis, vice president of the Media Workflow Group at Diversified, a global technology solutions provider with headquarters in Kenilworth, NJ, noted that as recently as three years ago, she was advising clients to adopt the following model:

Store high-resolution files on premises, using Tier 1 high-performance storage for editorial and Tier 2 slow-spinning disk for nearline. A second copy sits in a data center on LTO (Linear Tape-Open), while the third copy is sent to a preservation and archiving service, such as Iron Mountain.
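The three-copy rule is easy to audit against an asset inventory. The sketch below is a minimal illustration, assuming a hypothetical inventory in which each copy records its site; the `Copy` class and `assets_missing_copies` function are illustrative names, not part of any product mentioned here. It flags assets whose copies span fewer than three distinct sites, so two copies in the same building count only once.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Copy:
    asset_id: str
    site: str   # physical location of this copy (building, data center, vault)
    media: str  # storage medium at that site, e.g. "Tier 1", "LTO"


def assets_missing_copies(copies, required_sites=3):
    """Return asset IDs (sorted) whose copies span fewer than `required_sites` distinct sites.

    Two copies in the same building count as one site, so a Tier 1 and a
    Tier 2 copy on premises do not satisfy the rule on their own.
    """
    sites = defaultdict(set)
    for c in copies:
        sites[c.asset_id].add(c.site)
    return sorted(a for a, s in sites.items() if len(s) < required_sites)


inventory = [
    Copy("promo_2021", "on-prem", "Tier 2 nearline"),
    Copy("promo_2021", "data center", "LTO"),
    Copy("promo_2021", "archive service", "vault"),
    Copy("town_hall", "on-prem", "Tier 1"),
    Copy("town_hall", "on-prem", "Tier 2 nearline"),
    Copy("town_hall", "data center", "LTO"),
]
print(assets_missing_copies(inventory))  # ['town_hall']
```

Here "town_hall" has three copies but only two sites, which is exactly the gap the rule is meant to catch.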


These days, however, Davis is urging her clients to do away with LTO and archiving services in favor of migrating to the cloud. Here is a version of her new storage model:


The high-res file is stored locally on Tier 1 high-performance storage for editorial, or it can be migrated directly to the cloud, using VDI (virtual desktop infrastructure) for virtual editorial in a cloud environment and eliminating on-prem Tier 1 from the start. Davis counsels clients to evaluate their project life cycle and keep project files and related assets on Tier 1 storage for up to 12 weeks after final render, since there's a good chance a modified project file will be created or the newest assets will be repurposed.
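Davis's 12-week guideline can be expressed as a simple placement rule. This is a hedged sketch: the `suggested_tier` function and its "tier1"/"cloud" labels are hypothetical, and a real storage policy would likely weigh more than the final-render date.

```python
from datetime import date, timedelta
from typing import Optional

# Guideline from the text: keep project files on Tier 1
# for up to 12 weeks after final render.
TIER1_WINDOW = timedelta(weeks=12)


def suggested_tier(final_render: Optional[date], today: date) -> str:
    """Suggest 'tier1' or 'cloud' for a project's files (hypothetical labels).

    Projects with no final render yet are still in edit and stay on Tier 1.
    """
    if final_render is None or today - final_render <= TIER1_WINDOW:
        return "tier1"
    return "cloud"


print(suggested_tier(date(2024, 1, 1), date(2024, 3, 1)))  # tier1 (60 days)
print(suggested_tier(date(2024, 1, 1), date(2024, 4, 1)))  # cloud (91 days)
```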

Meanwhile, the second copy sits in S3-compatible public cloud hot storage, such as Wasabi, while a third copy resides with a separate public cloud provider in cold, less expensive storage. On top of this, Davis layers an HSM (hierarchical storage manager), which automatically moves assets between storage tiers based on the organization's storage policy. She says that HSMs also make editing more efficient for those who need to access stored assets for repurposing.

“As an editor, if I need a partial file restore of a full-res file to render out a final deliverable, the HSM will pull back that clip from wherever it's available the quickest,” Davis explained. “Additionally, an HSM allows for mezzanine workflows, requiring less intensive compute and proxy editorial from a MAM. For example, I can begin editing in the proxy while my full-res file is being pulled back from one of my storage tiers (the intelligence of the HSM is doing this in the background) and sync the proxy with the high-res file on render.”
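The "wherever it's available the quickest" behavior Davis describes amounts to choosing, among the tiers that hold a copy, the one with the lowest expected retrieval time. The sketch below models that time as a first-byte wait plus transfer time; the `Tier` class, the `fastest_source` function, and all figures are assumptions for illustration, not how any particular HSM works internally.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Tier:
    name: str
    first_byte_s: float     # wait before data starts flowing (cold tiers can take hours)
    throughput_mb_s: float  # sustained read rate once data flows, in MB/s
    holds: frozenset        # IDs of assets with a copy on this tier


def fastest_source(tiers, asset_id: str, size_mb: float) -> Optional[Tier]:
    """Return the tier expected to deliver `size_mb` of `asset_id` soonest, or None."""
    candidates = [t for t in tiers if asset_id in t.holds]
    if not candidates:
        return None
    return min(candidates, key=lambda t: t.first_byte_s + size_mb / t.throughput_mb_s)


# Illustrative figures only, not measured from any real product.
tiers = [
    Tier("nearline", 0.5, 200.0, frozenset({"keynote"})),
    Tier("cloud_hot", 1.0, 100.0, frozenset({"keynote", "webinar"})),
    Tier("cloud_cold", 4 * 3600.0, 100.0, frozenset({"keynote", "webinar", "archive_2019"})),
]
print(fastest_source(tiers, "keynote", 500).name)       # nearline
print(fastest_source(tiers, "archive_2019", 500).name)  # cloud_cold
```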

This approach, Davis argued, is more cost-effective than on-prem storage or even off-site LTO. Tape-based storage, she noted, requires rack space, heating and cooling, and the staff to maintain it. “It's not just the initial purchase cost; there are ongoing support costs for this, and they're quite pricey,” she said.


Davis added that on-prem infrastructures are also more expensive because they must be designed for peak demand. “By nature, that means you're [incurring] a capital investment for a future that you don't know exists,” she said. “The cloud will meet your demands when you have them, not when you think you're going to have them.”

Hybrid Balance

While most enterprise-level clients are cloud-savvy, there are instances where they prefer to keep assets on site. Still, Davis urges her clients to take advantage of the cloud.

In this model, she proposes deploying an object storage platform (such as DataCore Swarm, which came out of DataCore's acquisition of Caringo). This lets companies take advantage of cloud-style storage while keeping an on-prem model, either by virtualizing media assets on commodity hardware or by building their own private clouds across several of the organization's data centers. “It's much more scalable, it's cheaper to run and maintain, and the refresh time [is faster],” she said.


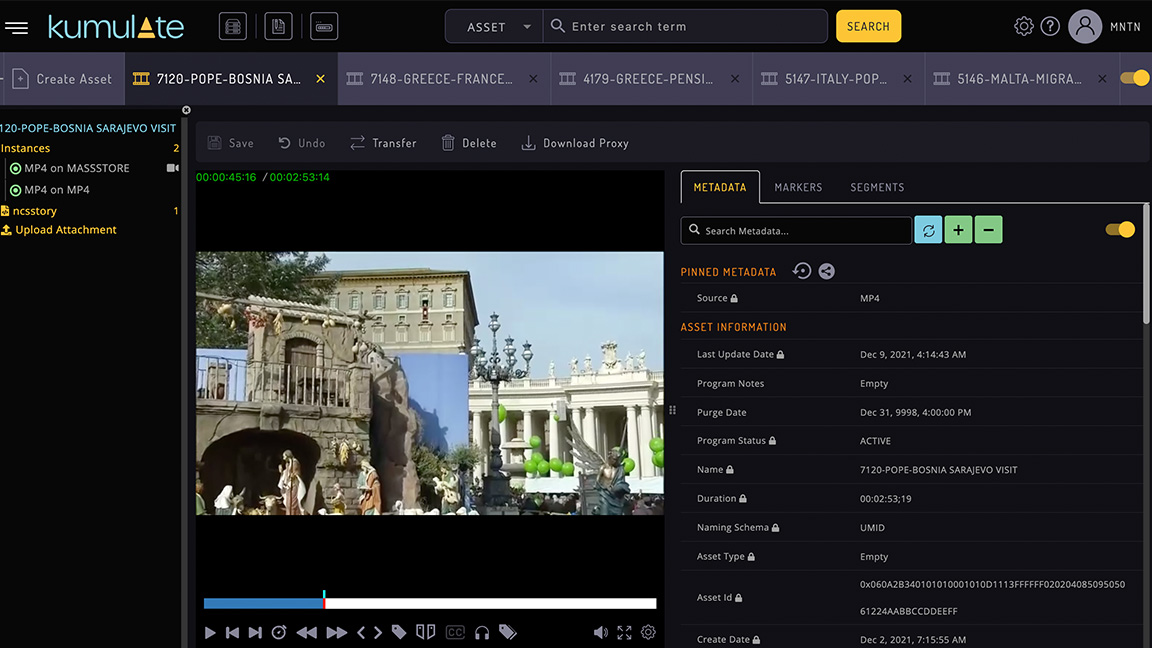

Savva Mueller, director of business development at Telestream, the Nevada City, CA-based developer of the Diva and Kumulate content storage management platforms, relayed that many of his organization’s users opt for a hybrid model. This could involve some type of on-prem solution in conjunction with cloud storage, or cloud storage in a multi-cloud environment.

“Hybrid is important, because nobody really wants to put all of their eggs in one basket,” Mueller said. He added that the multi-cloud approach requires content storage management systems to be able to not only migrate assets to the cloud, but between clouds when necessary.

Regardless of the specific model, Mueller urges organizations to use several storage vendors rather than, for example, trusting one vendor to store assets on multiple systems within its own environment. A single-vendor solution exposes companies to a range of potential problems, such as security incidents or cloud outages. “That becomes problematic because that one storage vendor now becomes your single point of failure,” he explained. “Spreading your content out across different storage vendors is the wisest choice that you can make.”
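Mueller's single-point-of-failure argument can be tested by simulating one vendor going dark and checking which assets lose every copy. This minimal sketch assumes a hypothetical list of (asset, vendor) placements; the vendor names and the `lost_in_outage` function are illustrative only.

```python
from collections import defaultdict


def lost_in_outage(placements, down_vendor):
    """Return asset IDs (sorted) with no surviving copy if `down_vendor` becomes unavailable.

    `placements` is an iterable of (asset_id, vendor) pairs, one per stored copy.
    """
    surviving = defaultdict(int)
    for asset_id, vendor in placements:
        surviving[asset_id] += int(vendor != down_vendor)
    return sorted(a for a, n in surviving.items() if n == 0)


placements = [
    # Three copies, but all with one vendor (on different systems).
    ("keynote", "vendor_a"), ("keynote", "vendor_a"), ("keynote", "vendor_a"),
    # Three copies spread across three vendors.
    ("webinar", "vendor_a"), ("webinar", "vendor_b"), ("webinar", "vendor_c"),
]
print(lost_in_outage(placements, "vendor_a"))  # ['keynote']
print(lost_in_outage(placements, "vendor_b"))  # []
```

The "keynote" asset has as many copies as "webinar", yet a single vendor outage wipes out access to all of them.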

Egress Fees and Analytics

While cloud storage may be more cost-effective in many cases, clients must understand their providers' egress fee structures, Mueller noted. That requires a clear understanding not only of the provider's terms but also of the organization's own usage patterns, which analytics (something Telestream provides) can help companies determine.

“Even with vendors who say they have no egress fees for their cloud storage, there can be ‘gotchas’ because they may [waive] egress fees only once the content has been in their system for a certain number of days,” Mueller explained.
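To see how such a "gotcha" plays out, here is a sketch of a hypothetical fee model in which egress is free only after content has been stored for a minimum number of days. The rate and the 30-day threshold are invented for illustration; actual provider terms vary and should be checked directly.

```python
from typing import Optional


def egress_cost(gb: float, days_stored: int, rate_per_gb: float,
                free_after_days: Optional[int] = None) -> float:
    """Egress charge under a hypothetical fee model.

    Egress is billed at `rate_per_gb` unless the provider waives it for content
    that has been stored for at least `free_after_days` days.
    """
    if free_after_days is not None and days_stored >= free_after_days:
        return 0.0
    return gb * rate_per_gb


# Hypothetical terms: $0.05/GB, waived after 30 days in storage.
print(egress_cost(500, days_stored=10, rate_per_gb=0.05, free_after_days=30))
print(egress_cost(500, days_stored=45, rate_per_gb=0.05, free_after_days=30))  # 0.0
```

The same 500 GB pull costs nothing or roughly $25 depending only on how long the content has sat in storage, which is why usage patterns matter as much as the headline terms.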

Telestream’s ability to perform partial file restores addresses the issue of egress fees. “If you’re dealing with an hour-long video file, the ability of the system to restore just the 30 seconds that you need becomes very important, because you’re getting it quickly, and if it’s in cloud storage, you’re egressing a much smaller file than you would have otherwise,” he said.
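The savings from a partial restore follow from simple arithmetic. Assuming a constant-bitrate file at a hypothetical 400 Mb/s, the sketch below compares the size of the full hour with the 30 seconds actually needed.

```python
def clip_size_gb(bitrate_mbps: float, seconds: float) -> float:
    """Approximate size of a constant-bitrate clip in GB (decimal units).

    megabits/s * s = megabits; / 8 -> megabytes; / 1000 -> gigabytes.
    """
    return bitrate_mbps * seconds / 8 / 1000


BITRATE_MBPS = 400  # hypothetical mezzanine bitrate
full = clip_size_gb(BITRATE_MBPS, 60 * 60)  # the whole hour-long file
partial = clip_size_gb(BITRATE_MBPS, 30)    # just the 30 seconds needed
print(full, partial, full / partial)        # 180.0 1.5 120.0
```

Under these assumptions, the partial restore egresses 1/120th of the data. In practice, long-GOP and other interframe-compressed formats typically need to be restored from a keyframe boundary, so the real partial file may be somewhat larger than this estimate.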

One of the main issues Davis runs up against is her clients' reluctance to move old assets to cheaper storage. Their argument: They never know when they may need those files.

When she analyzes file usage, however, Davis said she often finds that clients rarely, if ever, revisit those assets. She and her team spend significant time working with clients to develop sound data storage policies that both reduce data clutter and cut consumption of more expensive storage.
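A basic version of this usage analysis is to list assets that no one has opened within a given window. The sketch assumes a hypothetical mapping of asset IDs to last-access dates; the one-year window is an arbitrary default, and each organization's storage policy would set its own.

```python
from datetime import date, timedelta
from typing import Dict, Optional


def stale_assets(last_access: Dict[str, Optional[date]], today: date,
                 window_days: int = 365) -> list:
    """Return asset IDs (sorted) not accessed within `window_days` of `today`.

    `last_access` maps asset ID -> date of most recent access, or None if the
    asset has never been opened since it was stored.
    """
    cutoff = today - timedelta(days=window_days)
    return sorted(a for a, d in last_access.items() if d is None or d < cutoff)


last_access = {
    "launch_video": date(2024, 5, 20),
    "offsite_2019": date(2021, 3, 2),
    "raw_b_roll": None,
}
stale = stale_assets(last_access, today=date(2024, 6, 1))
print(stale)                          # ['offsite_2019', 'raw_b_roll']
print(len(stale) / len(last_access))  # share of assets that are candidates for cheaper storage
```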

This exercise is critical, Davis said, because storage—even in the public cloud—has its limits. “As we create more data clutter in the public cloud sector, public cloud providers then have to develop more data centers, and we are going to get to a place where we’re beyond capital gains,” Davis offered. “So, I’m a huge proponent of deleting the clutter.”

Phased Deployment

At EditShare, Mudholkar and his team strive to simplify the deployment of content management and storage solutions by breaking the project into a number of phases. Rather than painting a picture of the ideal model, which can often seem overwhelming, the team rolls out smaller implementations over the course of weeks and then months, he said.

Mudholkar has found that this phased approach makes the final goal more attainable and lets teams make tweaks along the way. “You're able to bring them along the journey and make it easier for them,” he explained, “so they don't feel like they have to make some big, huge, scary commitment when they really don't know what they're going to be using in six months.”

This approach was largely shaped by the pandemic, which drove organizations to rethink their planning. “Their long 12-, 18-, and 24-month IT planning cycles became smaller projects (smaller chunks) because they just didn't know who was going to be on staff and where they were going to be working,” Mudholkar said. “Personally, I think that's good for all of us. It makes it easier to plan things. Instead of trying to answer 100 questions about what you're going to do in two years, you're answering 10 questions on what you're going to do in two weeks.”

Carolyn Heinze has covered everything from AV/IT and business to cowboys and cowgirls ... and the horses they love. She was the Paris contributing editor for the pan-European site Running in Heels, providing news and views on fashion, culture, and the arts for her column, “France in Your Pants.” She has also contributed critiques of foreign cinema and French politics for the politico-literary site, The New Vulgate.