Do You Know the Difference Between IPMX and SMPTE ST 2110 (and AES67)?

IPMX is based on ST 2110, which includes audio standards based on AES67. If you are designing or installing an AV-over-IP system, the relationship between these terms is both interesting and important to understand. Read on!

What is SMPTE ST 2110?

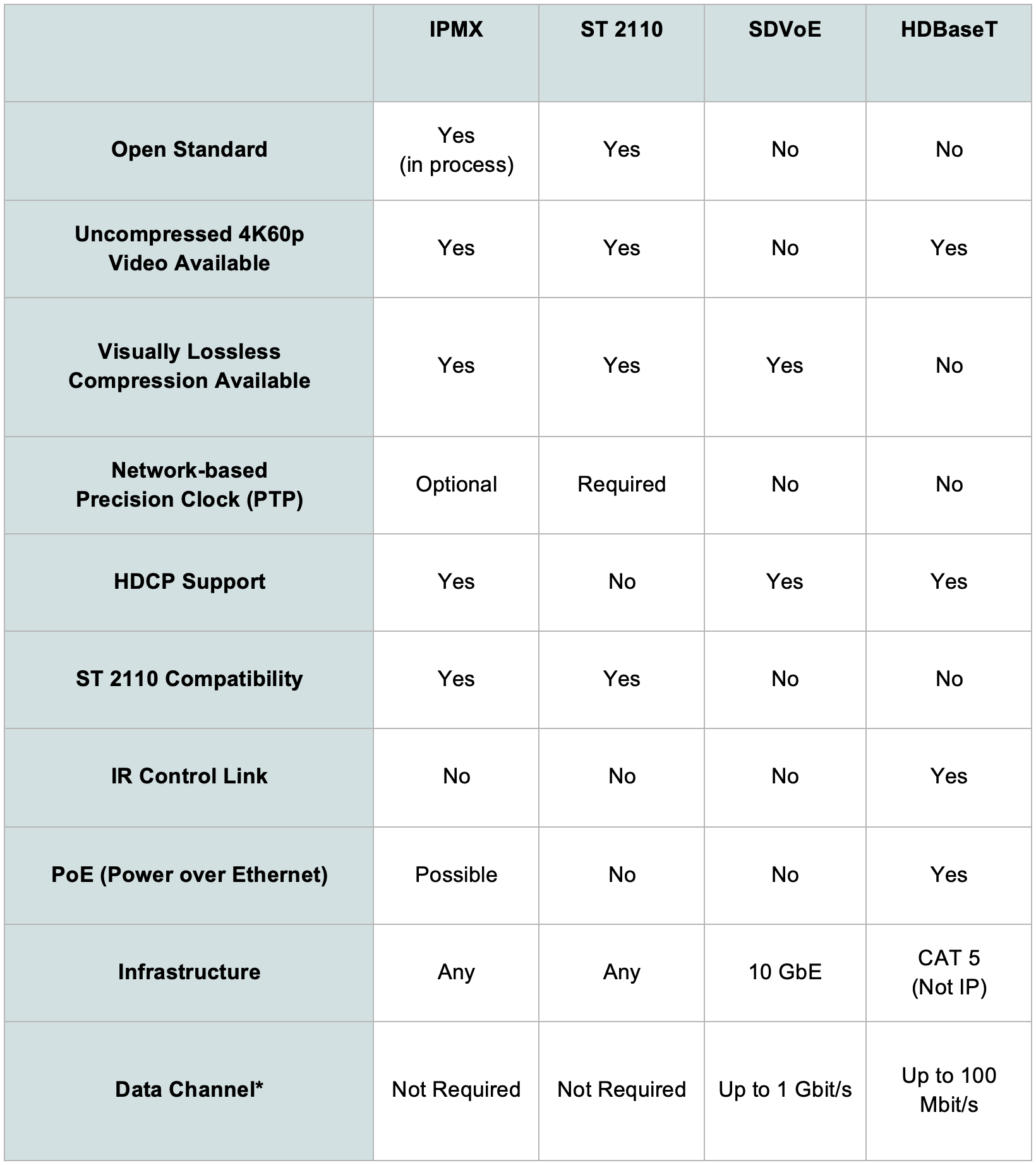

Conceptually, IPMX is an AV-over-IP open standard targeted at the pro AV market, while SMPTE ST 2110 is built for broadcast. However, this oversimplification requires clarification. When we refer to SMPTE ST 2110 systems, we typically mean systems that implement a small subset of what SMPTE defines across the entire suite of standards under the ST 2110 umbrella, including ST 2110-10, -20, -21, -22, -30, and so on. While SMPTE ST 2110 does not constrain systems to particular resolutions, color spaces, or frame rates, the reality is that most equipment supports only that small subset. Furthermore, many elements not included in the SMPTE standard are required for systems to work properly.

Therefore, there are two possible interpretations when someone mentions "ST 2110." The first is the standard, which supports resolutions from 2 pixels by 2 pixels to 32k by 32k, about a dozen color spaces, and any frame rate that can be expressed with a "samples over scale" equation. The second interpretation refers to the ST 2110 that is constrained by a document titled, in a way that only an engineer could love, JT-NM TR-1001-1 System Environment and Device Behaviors For SMPTE ST 2110 Media Nodes in Engineered Networks. This document describes the ST 2110 that is commonly used in the broadcast industry. The constraints outlined within TR-1001-1, in addition to the actual standards, are what enable interoperability between vendors in live broadcast production environments.
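To make the "samples over scale" idea concrete, here is a minimal Python sketch. It treats a frame rate as an exact ratio of two integers, in the spirit of the `exactframerate=60000/1001` style of parameter used in ST 2110-20 session descriptions. The helper names `parse_exact_framerate` and `frame_period_ticks` are hypothetical and exist only for this illustration, and the 90 kHz default is the usual RTP video clock rate, assumed here.

```python
from fractions import Fraction


def parse_exact_framerate(value: str) -> Fraction:
    """Parse 'N' or 'N/D' into an exact rational frame rate (hypothetical helper)."""
    rate = Fraction(value.strip())
    if rate <= 0:
        raise ValueError("frame rate must be positive")
    return rate


def frame_period_ticks(rate: Fraction, clock_hz: int = 90_000) -> Fraction:
    """Duration of one frame, measured in ticks of an RTP media clock."""
    return Fraction(clock_hz) / rate


ntsc_60 = parse_exact_framerate("60000/1001")
print(float(ntsc_60))               # roughly 59.94 frames per second
print(frame_period_ticks(ntsc_60))  # 3003/2, i.e. 1501.5 ticks per frame
```

Keeping the rate as a ratio rather than a rounded decimal avoids drift: 59.94 is only an approximation of 60000/1001, and the difference accumulates over a long-running stream.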

Constraining and Extending

The approach taken with IPMX is similar to that of TR-1001-1. However, due to the larger market for IPMX, more structure was required, along with a catchy acronym and logo. IPMX defines a base set of requirements for all IPMX devices, as well as a set of profiles, each of which can be thought of as a "TR-1001-1" document for a supported system environment. For a large portion of IPMX, this is accomplished by using and strategically constraining SMPTE ST 2110, as well as AMWA's NMOS API for media node discovery and connection management. We needed 4:4:4 color spaces and RGB color modes, which were already available in the ST 2110 standard, even though systems conforming to TR-1001-1 did not have them. All that was required was to change a few instances of "MAY" to "MUST".

However, simply changing constraints was not enough. New protocols and modes of operation were necessary to support the additional requirements generated by the much larger world of pro AV. Live production does not handle protected content, but this is critical in office and educational environments, so support for HDCP was added. Furthermore, broadcast video systems typically run in a single, uniform video mode synchronized to a single PTP source. While pro AV systems may operate in this way, more commonly the video mode is constrained by the display and may be one of several resolutions supported by the source. Additionally, the source may change the resolution at any time or go to sleep and stop sending video altogether, even though the source is still present and the flow is "connected". The idea of any of this happening in an SMPTE ST 2110 system would give a broadcast engineer the chills.

To meet these additional requirements, IPMX incorporates AMWA's NMOS IS-11 Stream Compatibility Management. This feature carries over the capabilities of EDID and hot-plug detection to the world of multicast IP networks, where the added complexity of codec and bandwidth considerations exists. Additionally, IPMX senders generate and send RTCP sender reports that contain key information about the streams. With this information, receivers can quickly handle changes in resolution and frame rate from the source. This is not possible with the existing SMPTE ST 2110 standard, which assumes that the system's stream parameters are static.
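For readers unfamiliar with RTCP sender reports, the following Python sketch parses the fixed part of a standard sender report as laid out in RFC 3550. It is an illustration only: it covers the generic RTCP fields and makes no attempt to model whatever additional stream information IPMX carries. The `SenderReport` class and `parse_sender_report` function are hypothetical names.

```python
import struct
from dataclasses import dataclass

RTCP_SR = 200  # RTCP packet type for a sender report (RFC 3550)


@dataclass
class SenderReport:
    ssrc: int           # synchronization source identifier of the sender
    ntp_seconds: int    # wall-clock time, whole seconds
    ntp_fraction: int   # wall-clock time, fraction in units of 1/2**32 s
    rtp_timestamp: int  # the same instant expressed on the RTP media clock
    packet_count: int   # RTP packets sent so far
    octet_count: int    # RTP payload octets sent so far


def parse_sender_report(packet: bytes) -> SenderReport:
    """Parse the 28-byte fixed part of an RTCP sender report."""
    if len(packet) < 28:
        raise ValueError("too short for an RTCP sender report")
    first_byte, packet_type, _length = struct.unpack_from("!BBH", packet, 0)
    if first_byte >> 6 != 2:
        raise ValueError("unsupported RTCP version")
    if packet_type != RTCP_SR:
        raise ValueError("not a sender report")
    # SSRC, NTP seconds, NTP fraction, RTP timestamp, packet and octet counts
    fields = struct.unpack_from("!IIIIII", packet, 4)
    return SenderReport(*fields)


# Hand-built example packet: version 2, no report blocks, length of 6 words.
example = struct.pack("!BBHIIIIII", 0x80, RTCP_SR, 6, 0x1234, 100, 0, 90_000, 10, 1_000)
print(parse_sender_report(example))
```

The pairing of an NTP wall-clock time with an RTP timestamp is the piece a receiver needs in order to place media samples on a common timeline.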

Extending the Timing Model

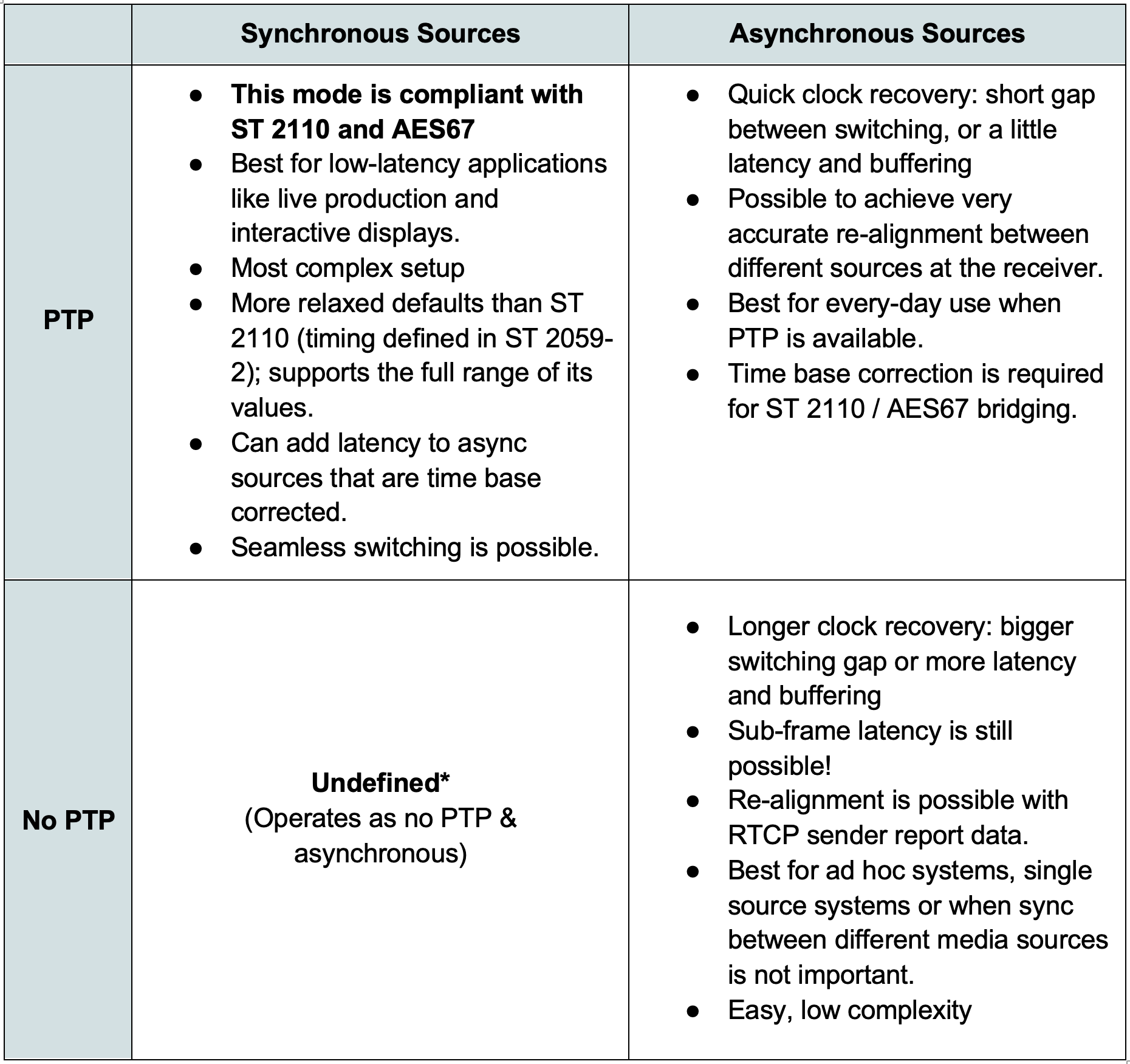

Although there have been a few articles about IPMX's timing model, it's important to highlight the differences in relation to SMPTE ST 2110 and AES67. Both of these require PTP, and neither supports asynchronous sources. For IPMX, there are three supported combinations of synchronous/asynchronous sources and PTP presence. This table illustrates the relationship:

Defining how asynchronous sources are handled on the network allows for precise tuning of system performance. For example, an esports arena may choose to stream computer sources asynchronously to minimize latency between the gaming PC and the DisplayPort monitor, which may be running at a high, non-standard frame rate. This would not be possible with ST 2110, but with IPMX, the stream can be kept in its native form and then re-clocked and frame rate converted into a standard video mode for live switching. In another example, it may be more efficient to correct the timing at the source. For ad hoc scenarios where you want AV-over-IP to behave more like HDMI and DisplayPort, the added complexity of PTP is unnecessary.
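As a rough illustration of the frame rate conversion step mentioned above, the Python sketch below uses the simplest possible strategy: for each output frame, reuse the most recent source frame, dropping or repeating frames as needed. This is an assumption made for the example, not a description of how any IPMX product converts frame rates; real converters may blend or interpolate frames instead. The function name `source_frame_indices` is hypothetical.

```python
import math
from fractions import Fraction
from typing import List


def source_frame_indices(src_fps: Fraction, out_fps: Fraction, out_frames: int) -> List[int]:
    """For each output frame, return the index of the latest source frame available."""
    ratio = src_fps / out_fps  # source frames that elapse per output frame
    return [math.floor(k * ratio) for k in range(out_frames)]


# A 144 fps gaming source converted to 60 fps: most source frames are dropped.
print(source_frame_indices(Fraction(144), Fraction(60), 6))  # [0, 2, 4, 7, 9, 12]

# A 24 fps source converted to 60 fps: source frames are repeated.
print(source_frame_indices(Fraction(24), Fraction(60), 6))   # [0, 0, 0, 1, 1, 2]
```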


Working Together

From the beginning, ensuring interoperability with pure SMPTE ST 2110 and AES67 systems and flows was a top priority. When IPMX goes beyond the boundaries of either standard, care has been taken to ensure that re-integration is simple. For instance, even without PTP, asynchronous sources retain timing data that implementations can use to resample and realign content as it flows into the ST 2110-compliant environment.

Also, IPMX devices can send and receive flows that match the requirements of SMPTE ST 2110 or AES67, as long as the content profile is compatible between the devices. This is possible due to the flexibility provided within the existing standards. For instance, as mentioned earlier, IPMX uses RTCP sender reports to convey the timing of asynchronous media flows and to partially describe the stream so that receivers can react quickly to changes, such as when a laptop changes resolution. In contrast, AES67 and SMPTE ST 2110 do not use RTCP sender reports because their media flows are synchronous and unchanging, meaning that there would be nothing of interest to glean from the RTCP packets. However, since both AES67- and SMPTE ST 2110-compliant systems must tolerate RTCP packets, even though they go unused, they can reliably receive IPMX traffic that is synchronous and non-dynamic. On the receiving side of IPMX, as long as the device can handle the media content, an IPMX device should be capable of handling AES67 and SMPTE ST 2110 traffic.
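The way a sender report conveys timing can be sketched with the generic RFC 3550 mapping: a report pairs a wall-clock (NTP-format) time with the RTP timestamp for the same instant, so any other RTP timestamp in the flow can be placed on the sender's timeline. The Python below is a minimal sketch under that assumption and is not taken from the IPMX specifications; the function names are hypothetical, and the 90 kHz default is the usual video media clock.

```python
def ntp_to_seconds(ntp_seconds: int, ntp_fraction: int) -> float:
    """Convert a 64-bit NTP timestamp (two 32-bit halves) to seconds."""
    return ntp_seconds + ntp_fraction / 2**32


def rtp_to_wallclock(rtp_ts: int, sr_rtp_ts: int, sr_ntp_seconds: int,
                     sr_ntp_fraction: int, clock_hz: int = 90_000) -> float:
    """Map an RTP timestamp to sender wall-clock time using one sender report."""
    # RTP timestamps are 32-bit and wrap around, so take a signed 32-bit difference.
    delta = (rtp_ts - sr_rtp_ts) & 0xFFFFFFFF
    if delta >= 0x80000000:
        delta -= 0x100000000
    return ntp_to_seconds(sr_ntp_seconds, sr_ntp_fraction) + delta / clock_hz


# A frame stamped 45,000 ticks (half a second at 90 kHz) after the report.
print(rtp_to_wallclock(135_000, 90_000, 100, 0x80000000))  # 101.0
```

With each frame placed on a wall-clock timeline, a gateway has what it needs to resample or realign content to the clock of the destination environment.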

Finally, since both systems use NMOS as the common control plane, users can easily identify which devices generate flows that can be consumed by other devices, and adjust settings accordingly to achieve their objectives.

Full-Spectrum Interoperability

The process of bringing IPMX to market has revealed some interesting realities that many of us had not fully appreciated. Likely the biggest surprise was the amount of live production happening everywhere. Systems that would be at home in any broadcast facility exist in hospitals, schools, houses of worship, and businesses. That reality brings the requirements of interoperability to a new level.

While we started our IPMX journey believing in the benefits of enabling crossover between the broadcast and pro AV industries, we underappreciated the intense need to enable crossover between live production and presentation systems within industries. In fact, it's often difficult to even call it crossover, because this hour’s huddle room is next hour’s remote contribution room when it all just works together.

Enabling that kind of interoperability between vendors, workflows, and industries is an impressive feat of engineering and design. In today's world, it's exactly what professionals everywhere need, and we are excited to bring it to reality with IPMX.

*For a nice paper on how SMPTE ST 2110 constrained AES67 in much the same way that IPMX constrains ST 2110, check out AIMS’s AES67 / SMPTE ST 2110 Commonalities and Constraints.

Andrew Starks is the AIMS Marketing Work Group Chair and Director of Product Management at Macnica.