Using SRT for Live Streaming

From the video production engineer to the IT specialist, everyone involved in corporate video streaming faces a number of challenges. They include dealing with the limitations of unreliable networks, RTMP, firewall traversal, content security, and interoperability with proprietary video delivery systems.

These can become especially cumbersome when trying to get high-quality video between sites or from remote locations over the public internet.

Businesses of all kinds are streaming live events, and in many cases, they require some form of contribution to allow people from different locations to join the live streams as remote presenters. The most difficult part of these live streams is often dealing with unknown networks, like those in hotels or convention centers.

Even those who are streaming via known networks have to deal with the unpredictability of those networks, which can wreak havoc on a live stream if the wrong protocols are used to account for things like packet loss, jitter, physical distance, and firewall traversal.

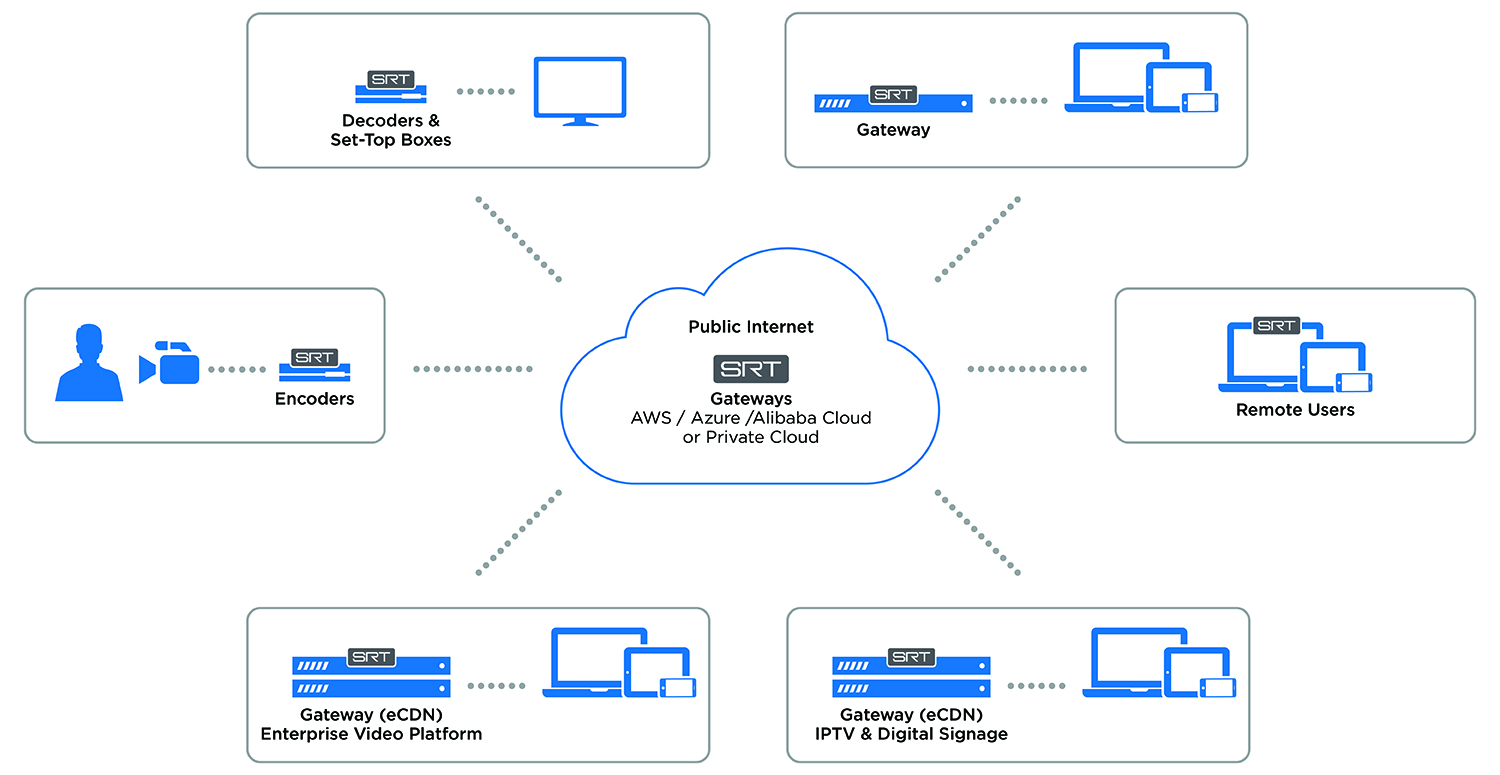

The reality is that everyone has to deal with the public internet at some point in order to deliver a live stream into and across the enterprise video network, rather than depending on dedicated networks. People are using various methods to try to deal with these challenges.

The Video Transport Challenge

For years, people have been working on different ways of overcoming the issue of unreliable networks in order to deliver low-latency, high-quality video. Today, there are a few methods in use:


First, Segmented Streaming is a common technology that “slices” video into 3- to 10-second file segments. As video arrives at the destination point, the files play in order with no break. The issue is that these segments can compound into delays of 30 seconds or more before playback starts at the destination.
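To see how segment length compounds into delay, here is a rough back-of-the-envelope sketch. It assumes the player waits for a fixed number of whole segments before it starts playing. The three-segment buffer and the function name are illustrative assumptions, not figures from any particular player.

```python
def segmented_startup_delay(segment_seconds: float,
                            buffered_segments: int = 3,
                            processing_seconds: float = 0.0) -> float:
    """Estimate delay before playback starts with segmented streaming.

    Assumption (illustrative only): the player buffers `buffered_segments`
    complete segments before playing, plus optional processing time for
    encoding, upload, and distribution.
    """
    if segment_seconds <= 0 or buffered_segments < 1:
        raise ValueError("segment length and buffer depth must be positive")
    return segment_seconds * buffered_segments + processing_seconds


# Compare the short and long ends of the 3-10 second segment range.
for seconds in (3, 6, 10):
    delay = segmented_startup_delay(seconds)
    print(f"{seconds}-second segments: about {delay:.0f} s before playback")
```

Under these assumptions, 10-second segments alone account for 30 seconds of delay before any encoding or distribution time is added.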

Packet Retransmission, built on the TCP/IP protocol, works well in cases where latency is not an issue, but the amount of buffering it causes makes it unusable for time-sensitive applications like remote contribution.

Forward Error Correction (FEC) is often used by broadcasters. While it can be effective for recovering from loss over very predictable networks, massive bandwidth overhead would be required to accommodate the extreme volatility of the public internet.
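The bandwidth trade-off can be illustrated with the simplest form of FEC: one XOR parity packet per group of data packets. This is a minimal sketch for illustration, not the scheme any particular broadcaster uses, and the helper names are hypothetical. One parity packet can repair exactly one loss per group, so surviving heavier loss means smaller groups and more overhead.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(packets: List[bytes]) -> bytes:
    """Build one parity packet by XOR-ing all packets in the group."""
    return reduce(xor_bytes, packets)


def recover(received: List[Optional[bytes]], parity: bytes) -> List[bytes]:
    """Rebuild at most one missing packet (marked None) from the parity."""
    missing = [i for i, packet in enumerate(received) if packet is None]
    if len(missing) > 1:
        raise ValueError("one parity packet can repair only one loss per group")
    repaired = list(received)
    if missing:
        present = [p for p in received if p is not None]
        # XOR of the parity with every surviving packet yields the lost one.
        repaired[missing[0]] = reduce(xor_bytes, present, parity)
    return repaired


def overhead(group_size: int) -> float:
    """Extra bandwidth as a fraction of the payload: one parity per group."""
    return 1 / group_size


group = [b"AAAA", b"BBBB", b"CCCC", b"DDDD"]
parity = make_parity(group)
damaged = [group[0], None, group[2], group[3]]
print(recover(damaged, parity) == group)
print(f"overhead for groups of 4: {overhead(4):.0%}")
```

With groups of four, the stream carries 25 percent extra traffic all the time, whether or not any packet is lost, and a burst that drops two packets in one group still cannot be repaired.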

And finally, the most common, but unpopular, RTMP. Originally designed to fuel Adobe’s Flash servers and players, RTMP suffers from the impact of TCP/IP retransmission, which is exacerbated any time the stream is transported over distance, dramatically limiting bandwidth utilization. RTMP also hasn’t scaled to meet the challenges of resolution, new encoding standards (HEVC), firewall traversal, stream security, and language and ad insertion support. In addition, CDNs like Akamai are no longer supporting RTMP.

“RTMP is used by default and not by preference,” said Sam Racine, director of sales, Americas and Asia Pacific, at Matrox. “The industry has been waiting for an open source protocol like SRT, that not only overcomes the challenges of low latency, firewall traversal, and security, but also facilitates interoperability within the video ecosystem of an organization.”

SRT as an Open Source Alternative

The SRT (Secure Reliable Transport) streaming protocol and technology stack was developed by Haivision in 2013 to deal with these very problems. In 2017, Haivision made SRT available to the world as an open source protocol on GitHub, allowing anyone to freely integrate and use SRT to improve their video solutions.

Since then, more than 140 organizations serving the streaming and broadcast communities have shown support for SRT, including global leaders such as Comcast, Brightcove, Limelight, Microsoft, Ericsson, Wowza, the European Broadcasting Union (EBU), Matrox, Teradek, and many others. SRT is also supported by open-source applications like VLC, Wireshark, GStreamer, and FFmpeg. Most companies show their support by simply joining the SRT Alliance, an initiative founded by Haivision and Wowza to advance the adoption of SRT within the market.

The primary challenge that SRT solves is packet loss. SRT uses an advanced retransmission technology that minimizes latency while making sure that every packet is received at the other end. Think of it as TCP/IP-like, but designed for UDP-style, low-latency performance.
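The idea of retransmitting within a fixed latency budget can be sketched with a toy timing model. This is a deliberately simplified illustration and not SRT's actual algorithm. It assumes a lost packet is noticed one packet interval later, that the repair request and the resent packet each take half a round trip, and that anything arriving after its play-out deadline is dropped. All names and numbers are hypothetical.

```python
from typing import Dict, Set


def simulate_retransmission(num_packets: int,
                            lost: Set[int],
                            interval_ms: float,
                            rtt_ms: float,
                            latency_ms: float) -> Dict[int, str]:
    """Classify each packet as on_time, recovered, or dropped.

    Toy model: packet `seq` is sent at seq * interval_ms and must be
    available by send time + latency_ms (the receiver's latency budget).
    """
    results = {}
    for seq in range(num_packets):
        sent = seq * interval_ms
        deadline = sent + latency_ms
        if seq not in lost:
            arrival = sent + rtt_ms / 2
            on_time_status = "on_time"
        else:
            # The gap is seen when the next packet lands (interval + rtt/2),
            # then the request and the resend each take rtt/2.
            arrival = sent + interval_ms + 1.5 * rtt_ms
            on_time_status = "recovered"
        results[seq] = on_time_status if arrival <= deadline else "dropped"
    return results


# Same loss, same network, two different latency budgets.
print(simulate_retransmission(5, {2}, interval_ms=10, rtt_ms=40, latency_ms=120))
print(simulate_retransmission(5, {2}, interval_ms=10, rtt_ms=40, latency_ms=60))
```

In this model, the latency budget is the knob: a budget of a few round trips leaves room to repair a loss, while a budget close to a single round trip does not. Unlike TCP, the stream never stalls waiting for a packet that can no longer arrive in time.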

SRT is a UDP-based protocol specifically designed to deliver low-latency video streams over unreliable, “dirty” networks. SRT manages security by providing independent AES encryption, which means that stream security can be managed at the link level. Firewall traversal is managed by supporting both sender and receiver modes (RTMP and HTTP support just a single mode), which makes delivery from outside networks to inside office networks, and beyond, much simpler and requires much less intervention from IT departments.
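In practice, many SRT-capable tools take these settings as query parameters on an `srt://` address. The sketch below builds such an address. It assumes the `mode`, `passphrase`, `pbkeylen`, and `latency` parameter names used by common tools such as FFmpeg and the SRT sample applications. The helper function and host name are hypothetical, and the unit of `latency` differs between tools, so the value is passed through unchanged.

```python
from typing import Optional
from urllib.parse import urlencode

VALID_MODES = {"caller", "listener", "rendezvous"}
VALID_KEY_LENGTHS = {16, 24, 32}  # AES-128, AES-192, AES-256 key sizes in bytes


def build_srt_uri(host: str, port: int, mode: str,
                  passphrase: Optional[str] = None,
                  pbkeylen: int = 16,
                  latency: Optional[int] = None) -> str:
    """Assemble an srt:// address (hypothetical helper for illustration)."""
    if mode not in VALID_MODES:
        raise ValueError(f"mode must be one of {sorted(VALID_MODES)}")
    params = {"mode": mode}
    if passphrase is not None:
        # SRT passphrases are limited to 10-79 characters.
        if not 10 <= len(passphrase) <= 79:
            raise ValueError("passphrase must be 10 to 79 characters long")
        if pbkeylen not in VALID_KEY_LENGTHS:
            raise ValueError("pbkeylen must be 16, 24, or 32")
        params["passphrase"] = passphrase
        params["pbkeylen"] = pbkeylen
    if latency is not None:
        params["latency"] = latency  # unit depends on the tool; check its docs
    return f"srt://{host}:{port}?{urlencode(params)}"


# The side behind the firewall dials out as the caller...
print(build_srt_uri("encoder.example.com", 9000, "caller",
                    passphrase="correct-horse-battery", pbkeylen=32))
# ...while the publicly reachable side waits as the listener.
print(build_srt_uri("", 9000, "listener",
                    passphrase="correct-horse-battery", pbkeylen=32))
```

Because either the sending or the receiving device can take the caller role, the end that sits behind a restrictive firewall can make an outbound connection, which is usually allowed without opening inbound ports.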

Whether an audio or video stream, HEVC or H.264, SRT is content agnostic. At the packet level, SRT reconstructs the data stream at the receiving end, which allows the feeds to survive packet loss, jitter, and fluctuations in network behavior, all while maintaining low latency.

Another powerful feature is SRT’s ability to report on changes in network characteristics, such as jitter, packet loss, latency, and bandwidth. In advanced encoder implementations equipped with SRT, this capability is tied to the encoder settings, allowing the encoder bitrate to adjust in real time as available bandwidth changes.
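A feedback loop of this kind might look roughly like the sketch below. It is an illustration only: the function name, thresholds, and step sizes are hypothetical, and the bandwidth and loss figures stand in for whatever statistics a given SRT integration reports.

```python
def next_bitrate_kbps(current_kbps: float,
                      est_bandwidth_kbps: float,
                      loss_pct: float,
                      min_kbps: int = 500,
                      max_kbps: int = 8000,
                      headroom: float = 0.8) -> int:
    """Pick the encoder's next target bitrate from reported link statistics.

    All thresholds here are illustrative assumptions, not recommendations.
    """
    # Leave headroom under the estimated bandwidth for retransmissions.
    target = est_bandwidth_kbps * headroom
    if loss_pct > 2.0:
        # Heavy loss: back off by at least 15 percent.
        target = min(target, current_kbps * 0.85)
    elif target > current_kbps:
        # Healthy link: ramp up gently, 5 percent per step.
        target = min(target, current_kbps * 1.05)
    # Keep the result within the encoder's allowed range.
    return round(max(min_kbps, min(max_kbps, target)))


print(next_bitrate_kbps(4000, est_bandwidth_kbps=3000, loss_pct=0.0))
print(next_bitrate_kbps(4000, est_bandwidth_kbps=10000, loss_pct=0.0))
print(next_bitrate_kbps(4000, est_bandwidth_kbps=5000, loss_pct=5.0))
```

Dropping quickly and recovering slowly is a common design choice for this sort of loop, since overshooting the link causes visible loss while undershooting only costs some picture quality.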

The Open Source Community Loves to Solve Problems

In one case, an SRT Alliance member used the SRT GitHub forum to ask a question. He was using an HEVC encoder to generate an H.264 stream and an HEVC stream at the same bitrate, resolution, and frame rate, but the timestamps of the two streams were off by about 80–120 ms. He needed a way to synchronize both streams without using MPEG-TS via UDP and was wondering how SRT could help sync his streams.

After some dialogue with other developers on GitHub, he began experimenting, and within a day, he was able to send a stream over SRT from Europe to the US, where a team received the SRT signal, transmuxed it from SRT to UDP, and then sent it to four monitors at the same time in four different rooms, all of which were “perfectly in sync.”

He also noted that when using a CDN with RTMP with edge caching nodes, the latency was more than 10 seconds. But with SRT, he was able to achieve less than 1 second of latency and believes that there’s room to reduce it further.

This type of community engagement helps make SRT better, faster, as more and more developers collaborate to find solutions to their unique problems.

Anyone Can Deliver Low-Latency Video Over the Public Internet

As more and more video streaming technology vendors adopt SRT, proprietary systems are becoming less and less appealing. SRT is already supported by various IP cameras, encoders, decoders, gateways, OTT platforms, and CDNs.

SRT is free and available to anyone who wishes to use it without royalties, contracts, or subscription fees. It is available on GitHub (go2sm.com/srt/) and includes source code, build tools, and sample applications. SRT is fast and easy to implement: One company completed its implementation on their encoder and decoder on the very same day that SRT was open-sourced, while a live streaming syndication company deployed SRT across their cloud platform in a single afternoon.

Those wishing to learn more can visit srtalliance.org.

Peter Maag is EVP and CMO at Haivision.