Quality of Service Part 2: Implementing DSCP (The Easy Way)

This installment of Quality of Service Deep Dive discusses the basics of setting up QoS using DSCP markings.

In QoS Part 1, we covered the high-level goals and concepts of using Quality of Service (QoS) to make sure that the most important network traffic gets through if the network becomes congested. This installment discusses the basics of setting up QoS using DSCP markings. It is completely switch agnostic and is intended to make it easier to follow the manual by concentrating on the what (you are doing) and why (you are doing it) rather than the how (do I find where this control is) which is the domain of the manufacturer’s documentation.

Implementing DSCP (The Easy Way)

The easiest way to implement DSCP is to have the packets to be prioritized marked by the source devices. It is common for Pro AV gear to provide DSCP markings on audio and video traffic (more common on audio than video). As with network equipment, the marking capabilities vary from product to product.

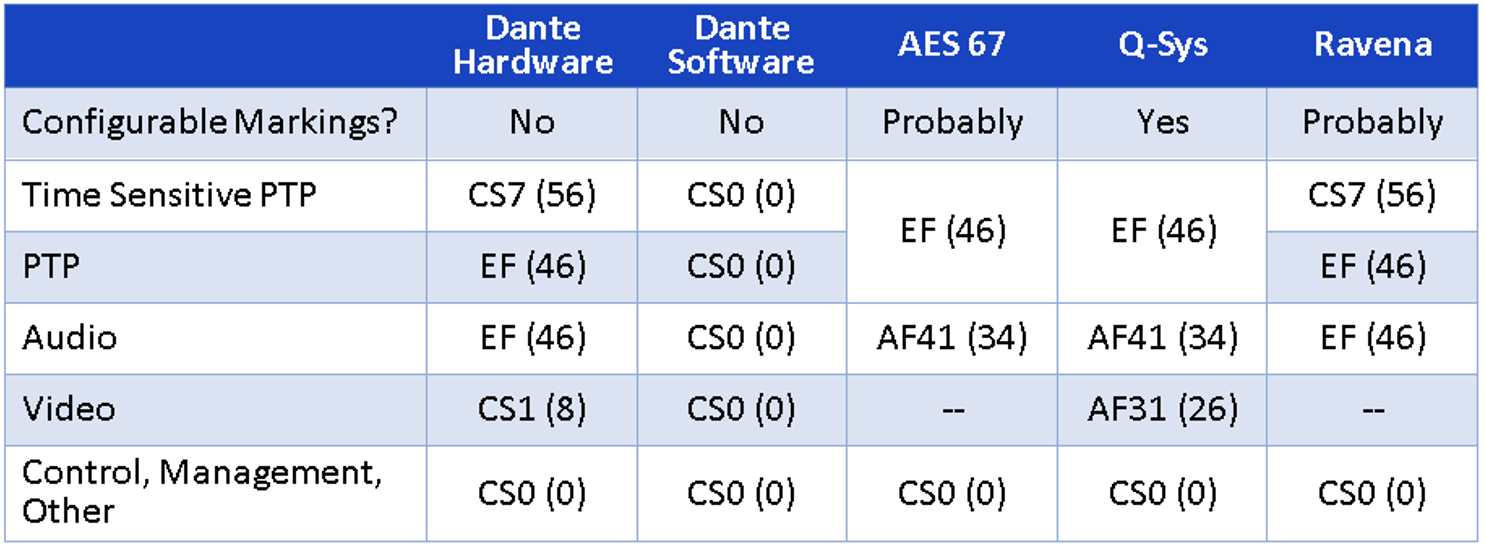

The default DSCP markings for the most common audio protocols used in Pro AV are shown below.

As you can see, there is no real consensus as to the DSCP values to use for the various traffic types. In some cases, you can change the DSCP settings from the default values, but in the case of Dante you can’t. AES67 and RAVENNA are listed as probably configurable, because it is strongly recommended that the default DSCP values be configurable, but the actual configuration feature is up to the device manufacturer. AVB, a protocol used by Biamp and others, is not shown because AVB does not use DSCP. CobraNet is not shown because it does not support pre-marking DSCP and because if you are trying to mix CobraNet with other protocols you have bigger problems.

If you are using a single protocol, you are all set. But if you are mixing protocols on a network, you will need to change your configuration so the markings do not cause a conflict. For example, if you were installing Q-Sys and Dante on the same VLAN, you would change the Q-Sys PTP markings to CS7 and the audio markings to EF (46) to avoid putting Dante audio and Q-Sys PTP in the same queue.
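Names like CS7 and EF are shorthand for 6-bit numeric values, and switch interfaces do not always show both forms. The sketch below converts the standard names to decimal using the usual definitions (class selector CSn = 8 × n, assured forwarding AFxy = 8x + 2y, EF = 46). The function names are hypothetical and exist only for illustration.

```python
def dscp_value(name: str) -> int:
    """Convert a standard DSCP name (CS0-CS7, AF11-AF43, EF) to its decimal value."""
    name = name.upper()
    if name == "EF":
        return 46  # Expedited Forwarding
    if name.startswith("CS") and name[2:].isdigit() and 0 <= int(name[2:]) <= 7:
        return 8 * int(name[2:])  # class selectors step in multiples of 8
    if name.startswith("AF") and len(name) == 4 and name[2:].isdigit():
        traffic_class, drop = int(name[2]), int(name[3])
        if 1 <= traffic_class <= 4 and 1 <= drop <= 3:
            return 8 * traffic_class + 2 * drop
    raise ValueError(f"unknown DSCP name: {name}")


def ds_byte(dscp: int) -> int:
    """DSCP occupies the upper six bits of the IP header's DS (formerly ToS) byte."""
    return dscp << 2


if __name__ == "__main__":
    for label in ("CS7", "EF", "CS0"):
        value = dscp_value(label)
        print(f"{label}: DSCP {value}, DS byte 0x{ds_byte(value):02X}")
```

Some tools display the whole DS byte rather than the DSCP value, which is why EF can show up as 0xB8 (184) instead of 46.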

Switch Configuration for QoS

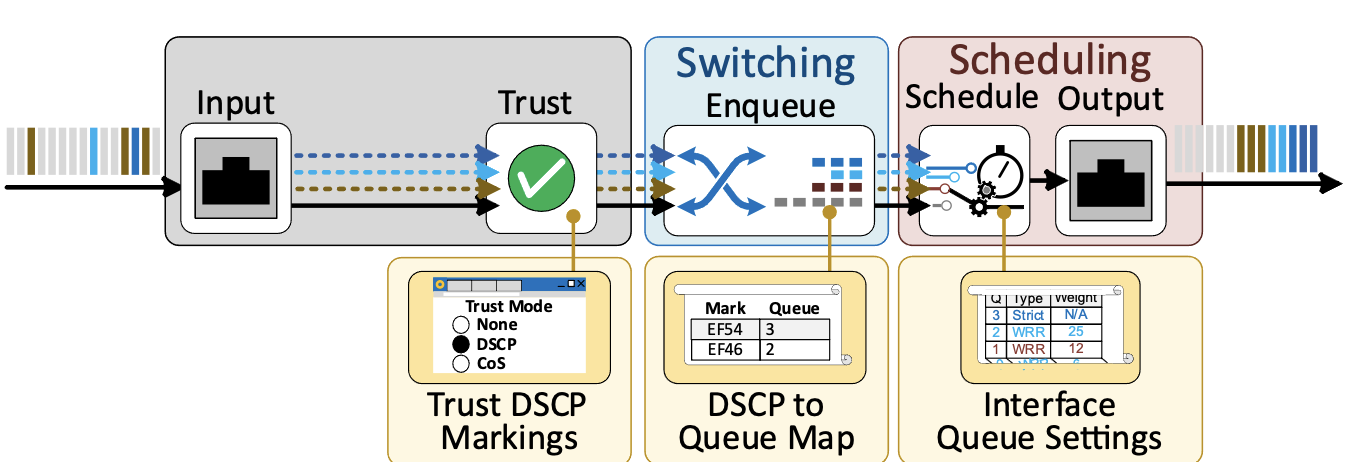

Configuring the switch when all DSCP markings are applied by the source devices involves three steps.

1: Trusting the inbound DSCP markings.

2: Mapping the DSCP markings to their respective queues.

3: Scheduling the queues at each output.

Trust mode tells the switch port to pay attention to QoS markings on packets coming into the switch and how the switch should interpret the QoS markings. Trust mode is generally off by default. Some manufacturers’ switches re-mark the DSCP values on packets coming into untrusted ports, while others just ignore the values.

You need to set any ports with audio protocols to “trust DSCP markings”. This includes any ports connected to the source devices as well as ports connected to receiving devices, as they still need to prioritize PTP. Any switch-to-switch links that carry audio traffic also need to be set to trust DSCP markings. While you can often use a global setting to trust DSCP markings on all ports, best practice dictates that trust only be applied to ports you need to trust. Otherwise, a random device could be marking traffic that is placed in queues you don’t want it in.

If you are curious whether a non-audio device is marking traffic, you can mirror its port to a computer running Wireshark. The display filter ip.dsfield.dscp != 0 will show any IPv4 traffic that has DSCP markings.
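To make it concrete what such a filter is testing: in an IPv4 header the DSCP value is the upper six bits of the second byte. The following is a minimal sketch, assuming you already have the raw IPv4 header bytes from a capture; the function name is hypothetical and IPv6 is not handled.

```python
def dscp_from_ipv4_header(header: bytes) -> int:
    """Return the DSCP value (0-63) carried in a raw IPv4 header."""
    if len(header) < 20 or header[0] >> 4 != 4:
        raise ValueError("not an IPv4 header")
    # Byte 1 is the DS field: six bits of DSCP followed by two bits of ECN.
    return header[1] >> 2


if __name__ == "__main__":
    # Hand-built 20-byte header: version 4, header length 5 words, DS byte 0xB8.
    sample = bytes([0x45, 0xB8]) + bytes(18)
    dscp = dscp_from_ipv4_header(sample)
    print("DSCP", dscp, "(marked)" if dscp != 0 else "(unmarked)")
```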

Imagine having to manage the entry to an event where there are multiple ticket types: VIPs who can’t be inconvenienced, influencers who might be important someday, regular people who paid for their tickets, and the entire 7th grade class from the local middle school on a field trip. A single line could be disastrous if a VIP shows up right after the buses from the middle school. It is better to have multiple lines and have the arriving attendees enter the line for their ticket type. You can then control the wait time by adding ticket takers to the priority lines if they start to get long. QoS queues work the same way. The DSCP markings are the ticket type, output queues are the multiple lines, and the DSCP-to-queue mappings are the signs directing people (and interns helping VIPs) into the right lines.

Your switch will most likely have 4-8 output queues available. Some switches that support AVB may lower the number of available QoS queues when AVB is enabled. These switches are dedicating (usually 2) queues for AVB. Marked DSCP packets are assigned to output queues at the switch level (one configuration with the same queue assignment for all ports). This means you may have more marked traffic types than available queues. If this is the case, you will need to share queues with similar priorities.

In our DSCP (the easy way) example we can put PTP, audio, and everything else into three different queues. Higher number queues have higher priority, so PTP goes in the highest, then audio in the next highest, then everything else in the third queue.
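Conceptually, the DSCP-to-queue mapping is a 64-entry lookup table. The sketch below builds one for the three-queue example, assuming PTP is marked CS7 (56) and audio is marked EF (46) as in the Q-Sys and Dante example earlier. The queue numbering (1 to 4, highest number is highest priority) and the function name are hypothetical; your switch may number its queues differently.

```python
CS7 = 56  # assumed PTP marking
EF = 46   # assumed audio marking


def build_queue_map(num_queues: int = 4) -> dict[int, int]:
    """Map every DSCP value (0-63) to an output queue number."""
    highest = num_queues
    # Everything else, including unmarked (DSCP 0) traffic, goes to the third queue down.
    mapping = {dscp: highest - 2 for dscp in range(64)}
    mapping[CS7] = highest      # PTP in the highest queue
    mapping[EF] = highest - 1   # audio in the next highest
    return mapping


if __name__ == "__main__":
    queue_map = build_queue_map()
    for dscp in (CS7, EF, 0):
        print(f"DSCP {dscp:2d} -> queue {queue_map[dscp]}")
```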

The lowest priority queue is often called best effort (BE) and includes any unmarked traffic (actually marked with a value of 0 (CS0)). There is another very low priority queue assignment strategy that is used in IT, more commonly on wide area links (the ones that cost money), for traffic that has no time sensitivity like offsite backups. This traffic is marked with a DSCP value and assigned to a queue below unmarked, best effort traffic. This queue is given no bandwidth of its own. This is often called Scavenger Class because it is only getting bandwidth when the marked traffic isn’t using it.

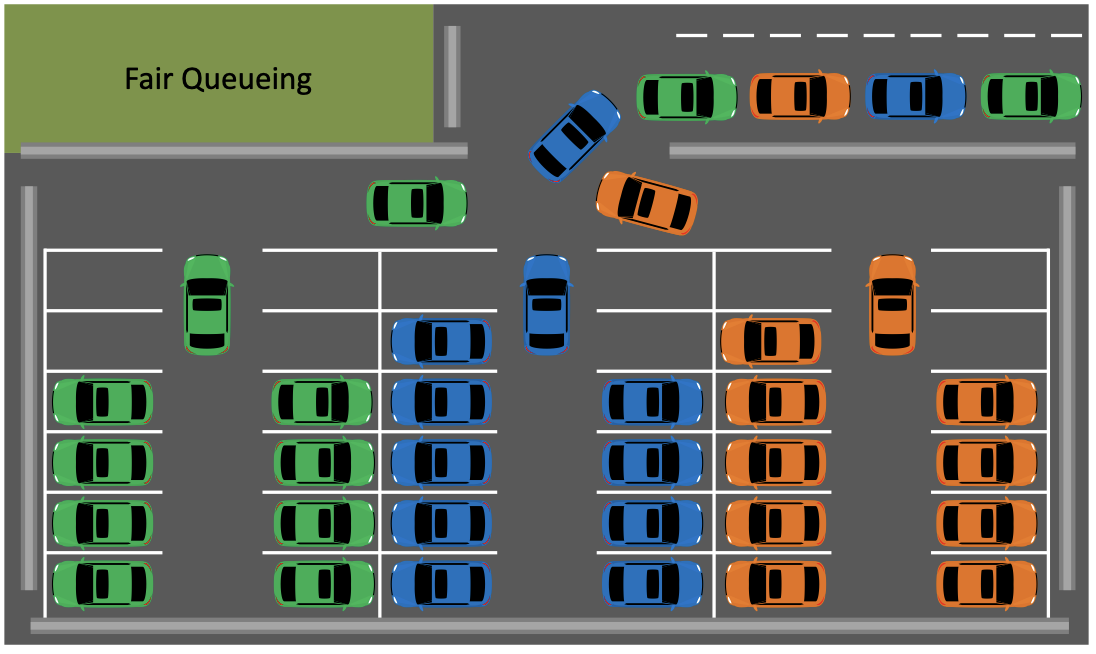

Network traffic is a lot like regular traffic: if you have a lot of cars leaving a parking lot, the fair thing to do is take turns where the traffic merges. In computing, Fair Queuing is a set of methods for processes to share resources by taking scheduled turns rather than letting one process run until it’s finished. Fair Queuing is not concerned with priority processes, just that everyone gets a share of the resources. Networks didn’t use Fair Queuing because they already shared resources using FIFO.

When networks matured to the point that QoS was desirable, they borrowed some of the methods from Fair Queuing and added the ability to set priorities, resulting in Weighted Fair Queuing (WFQ), the first QoS method that was widely accepted. In Weighted Fair Queuing each queue is given a weight which corresponds to the minimum forwarding bandwidth available to the queue. The weight value is not an absolute guarantee of minimum bandwidth available to the queue. The queue bandwidth is calculated as the queue weight divided by the sum of all queue weights. This results in a proportional bandwidth value for each queue. The scheduler starts at the queue with the highest weight and forwards traffic from that queue until the number of bytes allowed to the queue for that round have been forwarded, or until the queue is empty. It then moves on to the next highest weight queue, repeating until the lowest queue has been serviced, and then it starts over.

If any queues do not use their full allocation, the WFQ cycle takes less time, resulting in more effective bandwidth available for the other queues.
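The proportional calculation is simple enough to sketch. The example below assumes four queues with hypothetical weights on a Gigabit link; the second function shows how the shares grow when some queues have nothing to send. The queue names and weights are illustrative only, not defaults from any switch.

```python
def wfq_shares(weights: dict[str, int], link_bps: float) -> dict[str, float]:
    """Bandwidth per queue: weight divided by the sum of all weights, times link rate."""
    total = sum(weights.values())
    return {queue: link_bps * weight / total for queue, weight in weights.items()}


def wfq_shares_active(weights: dict[str, int], active: set[str],
                      link_bps: float) -> dict[str, float]:
    """Same calculation, counting only the queues that currently hold traffic."""
    busy = {queue: weight for queue, weight in weights.items() if queue in active}
    return wfq_shares(busy, link_bps)


if __name__ == "__main__":
    weights = {"q4": 4, "q3": 2, "q2": 1, "q1": 1}  # hypothetical weights
    gigabit = 1_000_000_000
    print(wfq_shares(weights, gigabit))
    # With q4 and q1 idle, q3 and q2 split the link 2:1.
    print(wfq_shares_active(weights, {"q3", "q2"}, gigabit))
```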

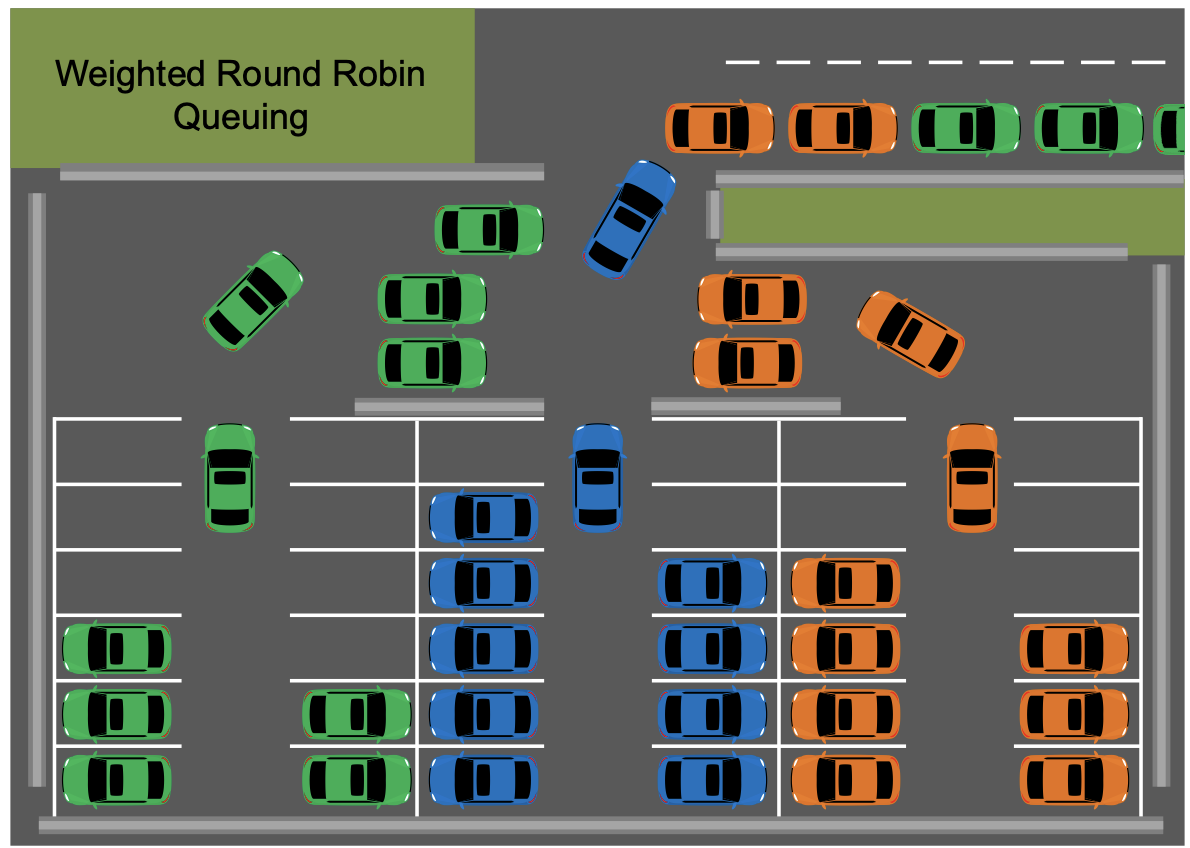

Over the years, many scheduling algorithms have been developed to address various QoS scenarios, but the most common and often only queueing algorithms supported on mid-level switches are Weighted Round Robin (WRR) and Strict Queueing.

Weighted Round Robin (WRR) was developed to address the fact that it takes a lot more computing power in the switch to count bits (bits/time = bandwidth) than it does to count packets. WRR works in the same manner as Weighted Fair Queuing, but the weight corresponds to the number of packets forwarded rather than the bandwidth. To make configuration easier, some switches still refer to percentage bandwidth in the Weighted Round Robin configuration, but they are just doing the math for you based on a standard packet size.
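A toy model of one WRR round can make the packet-counting behavior easier to see. This is a simplified sketch, not how any particular switch implements it: queues are visited from highest weight to lowest, and each may forward up to its weight in packets per round. The queue names and weights are hypothetical.

```python
from collections import deque


def wrr_round(queues: dict[str, deque], weights: dict[str, int]) -> list[str]:
    """Run one WRR round and return the packets in the order they were forwarded."""
    sent = []
    for name in sorted(queues, key=lambda q: weights[q], reverse=True):
        for _ in range(weights[name]):
            if not queues[name]:
                break  # queue emptied early; move on without waiting
            sent.append(queues[name].popleft())
    return sent


if __name__ == "__main__":
    queues = {
        "audio": deque(["a1", "a2", "a3", "a4", "a5"]),
        "other": deque(["o1", "o2", "o3"]),
    }
    weights = {"audio": 4, "other": 2}
    print(wrr_round(queues, weights))  # first round
    print(wrr_round(queues, weights))  # second round drains what is left
```

Note that the weights count packets regardless of size, so a queue full of large packets gets more bandwidth per round than a queue full of small ones.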

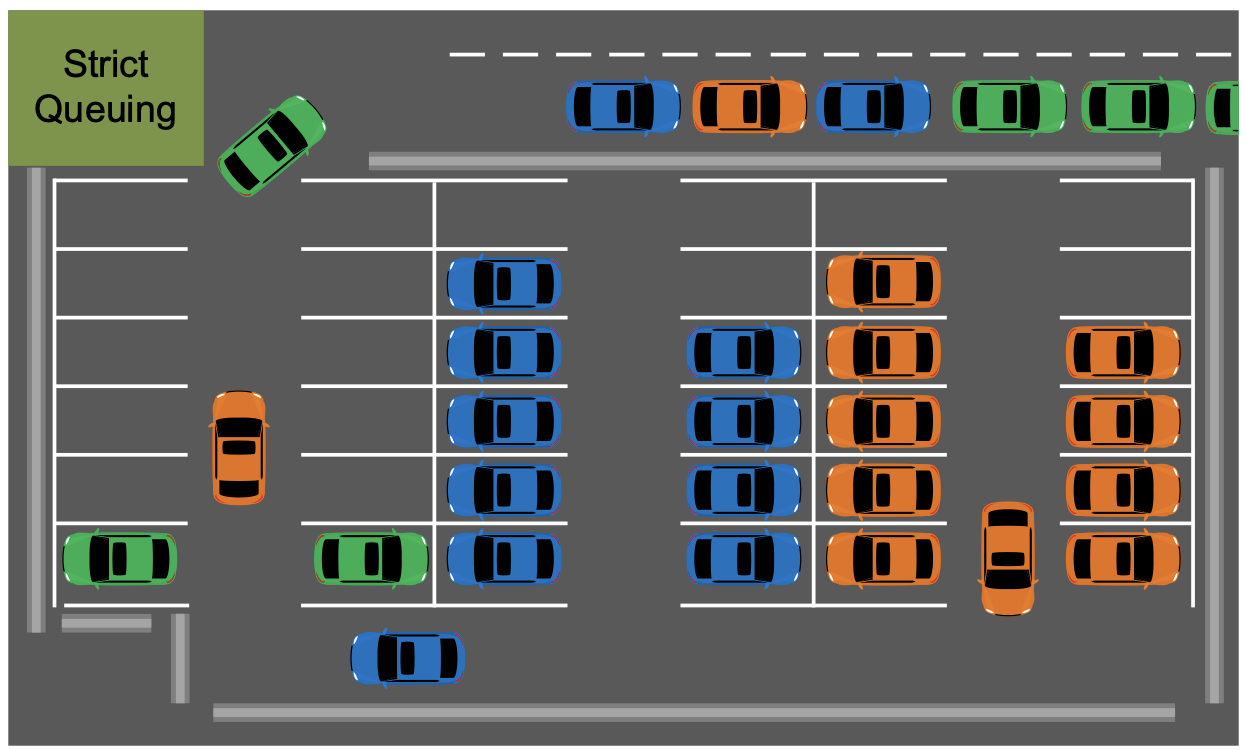

Strict queueing, also called Priority queueing, is the gold standard for prioritizing traffic, but it’s not very fair. The highest priority queue with packets is serviced until it is empty. When a packet arrives in a strict queue, the forwarding of packets from any lower priority queues will stop, and the packet(s) in the strict priority queue will be transmitted.

In practice, strict queueing is only used for the highest priority queues and WRR is configured for the remaining queues. This is often called mixed queuing. Typically, you would only have one or two queues configured as strict. Strict queuing cannot be used on a queue if WRR is configured on a higher priority queue. Packets arriving in any strict queue preempt the WRR scheduler, even if the packet count has not been reached. The scheduler acts in strict mode until all strict queues are empty and then the WRR scheduling resumes where it left off.
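The interaction between strict and WRR queues can be modeled in a few lines. The sketch below is a simplified, hypothetical scheduler (class and method names are invented for illustration) that forwards one packet per call: strict queues are always checked first, and the WRR position and remaining packet count are remembered so WRR resumes where it left off.

```python
from collections import deque


class MixedScheduler:
    def __init__(self, strict_names: list[str], wrr_weights: dict[str, int]):
        # strict_names is listed highest priority first.
        self.strict = {name: deque() for name in strict_names}
        self.wrr = {name: deque() for name in wrr_weights}
        self.weights = dict(wrr_weights)
        self.order = sorted(wrr_weights, key=wrr_weights.get, reverse=True)
        self.idx = 0                                  # current WRR queue
        self.credit = self.weights[self.order[0]]     # packets left this turn

    def enqueue(self, queue: str, packet: str) -> None:
        target = self.strict if queue in self.strict else self.wrr
        target[queue].append(packet)

    def next_packet(self):
        # Any waiting strict packet preempts WRR, even mid-turn.
        for q in self.strict.values():
            if q:
                return q.popleft()
        # Otherwise resume WRR from the saved position.
        for _ in range(len(self.order) + 1):
            name = self.order[self.idx]
            if self.credit > 0 and self.wrr[name]:
                self.credit -= 1
                return self.wrr[name].popleft()
            self.idx = (self.idx + 1) % len(self.order)
            self.credit = self.weights[self.order[self.idx]]
        return None  # nothing to send


if __name__ == "__main__":
    sched = MixedScheduler(["ptp"], {"audio": 2, "control": 1})
    for pkt in ("a1", "a2", "a3"):
        sched.enqueue("audio", pkt)
    sched.enqueue("control", "c1")
    print(sched.next_packet())   # WRR starts on the audio queue
    sched.enqueue("ptp", "p1")   # a PTP packet arrives mid-turn
    print([sched.next_packet() for _ in range(4)])
```

In the usage example, the PTP packet is forwarded as soon as it arrives, and the audio queue then finishes the remainder of its interrupted turn before control is serviced.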

Queuing Strategy

In QoS part one I said, “Quality of Service (QoS) is comprised of a set of tools and techniques which allow network devices to be configured to identify traffic that needs to be prioritized and to forward traffic based on the needs and priorities of the organization configuring the network.”

I use the term queuing strategy because there is no ‘one size fits all’ set of directions. Different technology mixes and different organizations have different priorities. I once did some work with a large financial firm that prioritized (TCP) market data above everything else. That was their priority.

Assuming that the priorities have been set and the queues defined in the earlier stages, our last task is to set the queue types and weights for each outgoing interface. Queue types and weights can be configured on a port-by-port basis. You may end up using the same configuration on all ports, but you don’t have to. To properly configure the queues, you should consider the following for each port.

>> What are the outbound traffic flows on the port?

>> What are the sensitivities of each outbound traffic flow that made me want to prioritize it?

Delay? Loss? Both?

>> What are the expected peak bandwidths both burst and average of each outbound traffic flow?

>> What outbound traffic flows share queues with other traffic flows?

The importance of QoS, and of the answers to these questions, rises as the bandwidth and the potential for congestion go up.

Since this is DSCP (the easy way), we will talk about simple AV networks where there are no other traffic types to consider, but we will look at the worst case of the port connected to a switch-to-switch link.

From a network perspective, the various networked audio profiles are very similar. You have three classes of traffic: PTP, audio, and control. Dante and RAVENNA separate PTP sync from the delay calculations but mark the delay calculations the same as audio. The delay calculations use so little bandwidth that they don’t really impact anything.

PTP sync is very sensitive to delay, especially the constancy of the delay. Variations in the delivery time of PTP sync packets, caused by being behind varying numbers of packets in a FIFO network, directly affect the level of synchronization. The audio streams are also sensitive to delay. A packet must arrive and be processed before the playout time (default 1ms on Dante) or samples will be discarded. If too many samples are discarded, the playback will stop completely. The control data flows are not very sensitive.

Audio bandwidth is not as low as you may think, because the audio protocols bundle multiple audio streams within a single packet. A typical 48kHz sample rate, 24-bit audio data flow with the most common bundle size of 4 is about 5Mbps, even if only one channel is being used. If audio is being sent unicast, there is a flow for each receiver. For a Gigabit link used only for audio, you can easily run 100+ 5Mbps flows without worry, and could push to 150 with some care. The audio manufacturers will have specific guidelines about the number of flows that is reasonable.
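The arithmetic behind those flow counts is straightforward. A minimal sketch, assuming the roughly 5Mbps per flow figure above and a 1000Mbps link (the function name is hypothetical):

```python
def audio_link_utilization(flows: int, flow_mbps: float = 5.0,
                           link_mbps: float = 1000.0) -> float:
    """Fraction of the link consumed by the given number of audio flows."""
    return flows * flow_mbps / link_mbps


if __name__ == "__main__":
    for flows in (100, 150):
        print(f"{flows} flows: {audio_link_utilization(flows):.0%} of the link")
```

So 100 flows use about half of a Gigabit link and 150 flows about three quarters, which is why the higher count calls for some care.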

For this use case, set the PTP (highest) queue to strict. The audio (next highest) and control (lowest of the three) queues can be set to WRR. The default weightings for the switch should be fine.

If you are running audio and video across the same network links, there are potentially some issues to consider. The answers to the questions for audio are the same as above.

Real-time video has a medium level of sensitivity to latency and loss. If it is synchronized, the common clock is typically based on Network Time Protocol (NTP) and does not require the precision of PTP, and any playout timers are at the scale of video frames (~17-33ms). Video also tends to be more resilient to packet loss than audio.

The potential issues come when audio and video are forwarded on the same network links. Remember, the weight metric for WRR is forwarded packets. This works well with typical IT type traffic because the average packet size over time will tend to be the same across different classes. With audio and video that is not the case.

Characteristically, audio is not very bursty and has a small packet size, while video is very bursty and has a large packet size. In a typical AV scenario with one 20Mbps video flow and a single audio flow (up to 4 channels), I am looking at about 6000 packets per second (pps) for audio (~5Mbps with a frame size of 147 bytes) and about 1750pps for video. This is a packet rate ratio of about 3:1. This assumes a normal packet size of no more than 1500 bytes. Some video devices require jumbo frames, which can be as large as 9000 bytes. A 20Mbps jumbo frame video stream only produces about 300pps, a 20:1 audio-to-video packet rate ratio.
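The video packet rates can be roughly checked by dividing the bit rate by the frame size. The sketch below takes the 6000pps audio figure from the text as a given input rather than deriving it, and it divides by the full frame size, ignoring header overhead, so the results land slightly below the rounded figures quoted above. The function name is hypothetical.

```python
def packets_per_second(bitrate_bps: float, frame_bytes: int) -> float:
    """Approximate packet rate, assuming every frame is full and ignoring overhead."""
    return bitrate_bps / (frame_bytes * 8)


AUDIO_PPS = 6000          # audio packet rate quoted in the text
VIDEO_BPS = 20_000_000    # one 20Mbps video flow

if __name__ == "__main__":
    for label, frame_bytes in (("standard 1500-byte", 1500), ("jumbo 9000-byte", 9000)):
        video_pps = packets_per_second(VIDEO_BPS, frame_bytes)
        print(f"{label} frames: {video_pps:.0f}pps video, "
              f"audio-to-video ratio about {AUDIO_PPS / video_pps:.1f}:1")
```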

The manufacturer default queues for WRR generally double at each level, so queue 4 would have twice the weight of queue 3. For QoS to be effective with the default settings in the standard packet size scenario, audio would need to be at least 2 queues higher than video, 4 queues higher in a system using jumbo frames.

This effect is compounded by two facts: there tend to be more audio flows than video flows, which increases the packet ratio, and video traffic tends to come in bursts. Depending on the video codec, the burst rate (the data rate of a short-term spike in network traffic) can be 2-3 times the nominal bandwidth. The bursts also tend to be long compared to other traffic types. With interframe codecs (H.264/5 and others), the burst is caused by the periodic transmission of a full video frame, so the burst time (the duration of a burst) can be up to 20ms. This means that for significant (by network standards) periods of time the packet ratio is even more unbalanced.
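To get a feel for the size of such a burst, the sketch below estimates how many full-size packets arrive during one. It assumes the 20Mbps video flow from the earlier example, the worst-case figures above (3 times nominal for 20ms), and 1500-byte frames with overhead ignored. The function name is hypothetical.

```python
def packets_in_burst(nominal_bps: float, burst_multiplier: float,
                     burst_ms: float, frame_bytes: int = 1500) -> float:
    """Approximate number of full-size packets sent during one burst."""
    burst_bits = nominal_bps * burst_multiplier * burst_ms / 1000
    return burst_bits / (frame_bytes * 8)


if __name__ == "__main__":
    packets = packets_in_burst(20_000_000, 3, 20)
    print(f"about {packets:.0f} packets in a 20ms burst at 3x nominal")
```

Under these assumptions that is roughly 100 large packets landing in the video queue within 20ms, which is the kind of spike the queue weights have to absorb.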

Accurately planning for the capacity of this type of network when there is potential congestion can be tricky as there are a lot of variables, and this is ‘the easy way’. You could research and calculate the best weight settings using all the variables, but there is an easier option. For a typical AV network, use the same settings as for the audio-only network, but set the audio queue scheduler to strict. This will ensure that video does not impact the audio.

Conclusion

I am lazy by nature. I try not to work any harder than I have to. If DSCP (the easy way) works, then I will use it. The problem I see most often (and I see problems for a living) is that some people will interpret it as DSCP (the only way). The universal fact about integrating technology is that it just works... until it doesn’t. There are many scenarios where the easy way won’t work. The real trick to not working any harder than I have to is knowing in advance if the easy way will work and knowing how to do it the hard way if I have to. That is what I will be exploring in QoS part three, which includes the previously foreshadowed section, Implementing DSCP (The Hard Way), and more. Coming soon to a web browser near you.

Paul Zielie, CTS-D,I is a multi-disciplined generalist with 30 years of experience designing and integrating IT, telecommunications, and audiovisual (AV) solutions.

Over the course of his career, he has had most of the roles in the AV/IT spectrum including customer/end user, IT owner, integrator, designer, managed service provider, distributor, pre-sale specifier, executive, and manufacturer. Because of this extensive real-world experience in every facet of the AV/IT lifecycle, he brings an almost unique perspective on the consequences, both intended and unintended, of how product and project features are implemented.

“What I really do for a living is solve problems, little or big, with appropriate technical solutions. I believe that technology is in service to organizational goals and workflow, and not an end in itself, and use that as a guiding principle in my design efforts.”

He is a prolific writer and speaker. He received the 2015 InfoComm International Educator of the Year award and was inducted into the SCN Hall of Fame in 2020. As a consulting solutions architect for AVCoIP LLC, Zielie specializes in working with AV manufacturers to create products that meet enterprise customers' IT requirements.