Manage, Publish, Distribute: 6 Essentials for Online Video

The pitfalls, protocols, and power of online video distribution.

An example of live content streaming from Mediasite by Sonic Foundry

You’ve wrapped up production, edited the content, and just debuted your CEO’s latest motivational video at the weekly managers’ meeting. Now it’s time to push the message out, publishing the content for internal associates, external partners, and perhaps even the public at large. This primer hits the highlights of managing, publishing, and distributing online video content. Along the way we’ll point out several potential pitfalls, from players to protocols, that hinder effective distribution and tracking of content consumption.

1. Know the difference between a player and a server.

I first moved from videoconferencing to online video delivery in the early days of streaming (c. 1998). At that time, a format war of sorts raged between Microsoft and Real, as both companies wanted to control all three major aspects of the streaming supply chain: the player, the server, and the compression algorithm.

If you were in Real’s camp, you used Real Server, Real Player, and the Real Video / Real Audio / Real Media codec. On the Microsoft side, it was Windows Media Player, the Windows Media Video / Audio codecs, and later Windows Media Services. Apple was there, on the periphery, with QuickTime as an offline video player, which had yet to be transformed into an iOS-based online video player.

Today, the dust in the codec battle has settled, in no small part thanks to the jointly developed codec known as H.264 (aka AVC or MPEG-4 Part 10), but the player war continues with different participants: Flash Player versus HTML5 versus embedded players on popular mobile devices.

The Georgia Southern University Center for Academic Technology Support taps Blackmagic Design to stream live events to the Georgia Southern Eagles’ website and mobile devices. Photo by Jeremy Wilburn, GSU.

The good news in all this is that most media servers can output content that plays on almost any media player. Wowza Media Server, for instance, can serve content to Mac laptops, Windows PCs, set-top boxes powered by Google TV, Apple iOS devices (which use HTTP Live Streaming, or HLS), and even Android OS devices, some of which use RTSP, some HLS, and still others Flash Player for Mobile. The same is true for other servers, such as Adobe Media Server and the Unified Streaming Platform server from Code Shop.

The ability of media servers to transform, or transcode, content from one video codec to another has long been a selling point, but today’s challenge comes in the form of converting from one format to another (via various forms of segmentation) to deliver H.264 content via “plain vanilla” web servers.

Bottom Line: The player war is (almost) over, so it’s time to focus on which player and media server combination offers the best overall solution.

2. Differentiate between a protocol and a technology.

The streaming industry has, from the outset, used specialized protocols that are referred to by a series of TLAs and FLAs (three- and four-letter acronyms, not to be confused with the .fla file extension that Flash Professional used).

Recently, though, we’ve seen a move from streaming-only protocols (RTP, RTMP, RTSP) to a series of acronyms that all have the letter H in them (HDS, HLS, DASH). The reason for the letter H is a significant one: each of these new technologies uses the HTTP protocol for delivery.

A live presentation is captured via Mediasite by Sonic Foundry

One common mistake is to assume that a protocol and a technology are the same. In reality, the protocol is what delivers media to a device, while the technology is the chosen wrapper in which the content is delivered. It’s a subtle difference, made a bit more confusing by the fact that formats are part of the mix.

We’ll tackle formats in the next section, but let’s look at protocols and technologies. On the standards-based front, there are two primary protocols for streaming: RTSP (Real Time Streaming Protocol) and HTTP (Hypertext Transfer Protocol). On the proprietary front, RTMP (Real-Time Messaging Protocol) was popularized by Adobe and used between Adobe’s media server and its Flash Player architecture.

The major issue with streaming-centric protocols such as RTP and RTSP was the fact that corporate firewalls often blocked any content on ports other than 80 and 8080, which were used for HTTP traffic. The inability to guarantee content sent via these streaming protocols would traverse the corporate firewall led the streaming industry to consider ways to deliver content via the HTTP protocol.

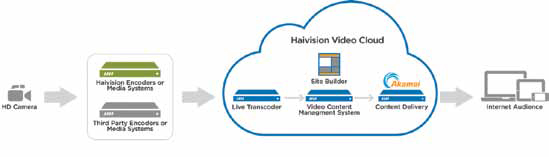

Haivision’s Video Cloud

Several different technologies emerged, all proprietary in nature: Adobe’s HTTP Dynamic Streaming (HDS), Apple’s HTTP Live Streaming (HLS), and Microsoft’s Smooth Streaming.

HLS has been propelled forward by the mass adoption of iOS devices and has been adopted by Google for its Android operating system. HDS has proven to be less attractive but still found a niche within Adobe’s Flash Player installed user base, as it offers some unique features. Smooth Streaming was very popular but has since been somewhat deprecated in favor of a standards-based HTTP technology known as Dynamic Adaptive Streaming over HTTP, or DASH for short.

Bottom Line: The HTTP protocol is now well established as a streaming protocol; it even seems to offer a chance to stream content at lower latency than older proprietary solutions. On the technology front, though, DASH and HLS will continue to battle for supremacy, with DASH taking the fragmented MP4 (fMP4) approach and HLS taking the MPEG-2 Transport Stream (M2TS) approach to packetizing short chunks of a longer media file.
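To make the idea of “short chunks of a longer media file” concrete, here is a minimal Python sketch of the kind of index file an HLS player fetches over plain HTTP before it requests the chunks themselves. The segment file names and durations are hypothetical, and the function is an illustration rather than a full packager; only the playlist tags are the standard HLS ones.

```python
import math


def build_media_playlist(segments, version=3):
    """Return HLS media playlist text for a list of (uri, duration_seconds)."""
    # The target duration is an integer that no segment duration may exceed.
    target = max(math.ceil(duration) for _, duration in segments)
    lines = [
        "#EXTM3U",
        f"#EXT-X-VERSION:{version}",
        f"#EXT-X-TARGETDURATION:{target}",
        "#EXT-X-MEDIA-SEQUENCE:0",
    ]
    for uri, duration in segments:
        lines.append(f"#EXTINF:{duration:.3f},")  # duration of the next chunk
        lines.append(uri)                         # where to fetch that chunk
    lines.append("#EXT-X-ENDLIST")  # on-demand file: no more chunks will follow
    return "\n".join(lines) + "\n"


# Hypothetical chunks cut from one longer video file.
segments = [("ceo_000.ts", 10.0), ("ceo_001.ts", 10.0), ("ceo_002.ts", 6.5)]
playlist = build_media_playlist(segments)
print(playlist)
```

Because both the playlist and the chunks are ordinary files, a standard web server can deliver them on port 80 without any streaming-specific protocol.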

3. Understand that formats come in two types but many sizes.

One of the most confusing terms in streaming media is the term “format.” It’s no wonder the term is confusing, as it refers both to a container structure as well as the encoding/decoding ecosystem to which that container belongs.

Here’s how to semi-easily navigate the understanding of formats. On the container format front, the first step is to look at the extension. An F4V extension, for instance, denotes an MP4 file wrapped in Adobe’s Flash Media Server (now AMS) format. Likewise, the older Real Media (.rm) or Windows Media (.wma, .wmv) extensions all denote specific player and media server requirements. Even if the content is served from a newer multi-format streaming server, the content often contains a specific set of audio and video codecs.

What about the MP4 container format? As I mentioned at the outset, Apple’s QuickTime is the basis for MP4 and the MPEG-4 System standard. QuickTime Player was one of the first to allow multiple codecs to be played back, and its structure made it easy to add codecs. Unfortunately, the MOV container format designation gave no hint as to what codec would be needed to play the enclosed audio or video file back.

Now that we’ve touched on the container format, what about the use of the term format to describe the media playback ecosystem required to play back particular content? As mentioned earlier, older proprietary systems used unique container format extensions. From these extensions it was easy to determine what ecosystem to use to decode the content.

The issue faced by users of the MOV file extension is actually more pronounced today, given that QuickTime is the basis for MPEG-4. Even within MPEG-2, there’s a possibility of several codecs being carried, depending on whether a Program Stream (PS) or Transport Stream (TS) is being used to transport the media information to the end user’s player.
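The extension-first check described above can be sketched in a few lines of Python. The table covers only the extensions named in this article, the ecosystem labels are my own shorthand, and the function name is hypothetical; treat it as an illustration of the reasoning, not a complete format detector.

```python
from pathlib import PurePosixPath

# Extensions that point to one specific player and server ecosystem.
# Drawn from the examples in this article; deliberately not exhaustive.
ECOSYSTEM_BY_EXTENSION = {
    ".rm": "Real",
    ".wma": "Windows Media",
    ".wmv": "Windows Media",
    ".f4v": "Adobe Flash (MP4-based)",
}

# Containers whose extension alone gives no hint about the codecs inside.
AMBIGUOUS_CONTAINERS = {".mov", ".mp4"}


def guess_ecosystem(filename):
    """Guess the playback ecosystem from the file extension alone."""
    ext = PurePosixPath(filename).suffix.lower()
    if ext in ECOSYSTEM_BY_EXTENSION:
        return ECOSYSTEM_BY_EXTENSION[ext]
    if ext in AMBIGUOUS_CONTAINERS:
        return "multi-codec container: inspect the file to find the codecs"
    return "unknown"


print(guess_ecosystem("Townhall.WMV"))
print(guess_ecosystem("clip.mov"))
```

The second branch is the MOV problem in miniature: the extension identifies the container, but the codecs have to be read from the file itself.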

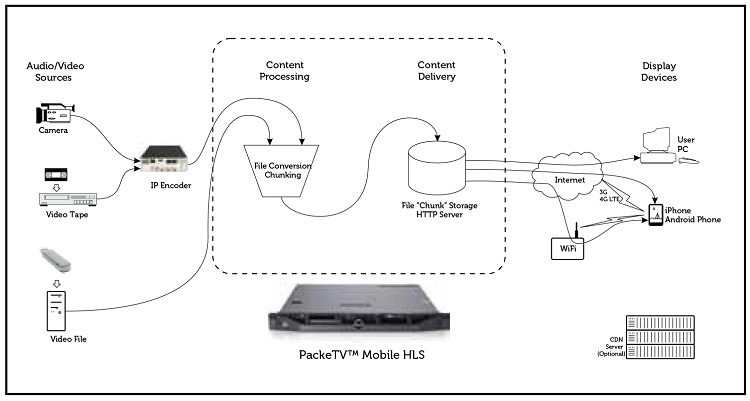

Visionary Solutions designed its PackeTV Mobile streaming media solution to simplify the delivery of live and file-based video content.

The same is true today for MP4 but within a constrained set of boundaries: the vast majority of content with the MP4 designation uses the H.264 video codec and Advanced Audio Coding (AAC). The older MPEG-1/MPEG-2 Audio Layer III codec (MP3) can also be used, but AAC offers about double the compression for the same audio quality.

There are a few other areas where multiple codecs can be used (the Blu-ray Disc, for instance, uses the H.264, MPEG-2, or VC-1 codecs), but there’s a concerted move to adopt H.264 and AAC as the standard codec set, which can then be segmented using the fMP4 or M2TS technology approaches mentioned in the previous section.

Bottom Line: Formats are confusing, but that’s why multi-format media servers were invented: to take the guesswork out of streaming content that uses a combination of AAC / H.264 for the base codec functionality. For acquisition and editing, though, you’re on your own to decide between the various formats.

4. Cut through the mobile conundrum.

Mobile video delivery is the fastest-growing area and one where all the issues mentioned in this article come together. From intermittent network connections and varying bandwidths to odd screen sizes and a plethora of formats, mobile video delivery represents a huge challenge.

On the other hand, it also offers potentially unprecedented opportunities. Location-based delivery is still in its infancy, but using integrated “geofencing” technologies like those available on iOS means that content may (and I emphasize may) be able to be restricted to particular markets and geographies. In addition, the growing use of tablets in second-screen or complementary-screen scenarios means that sponsorship and advertising models—or some yet unknown value-added model—could be used to offset the cost of delivering to so many different screen types.

On the mobile front, here are two factors to consider: the operating system and the delivery network.

First, the operating system, with its core media functionality as well as its overall ecosystem of task-specific applications, is a key deciding factor. To date, Apple has not committed to the standards-based DASH technology, choosing instead to push its proprietary (and somewhat antiquated) HLS technology. That means anyone looking to gain the advantage of H.264 via fMP4 will need to use fMP4 for all devices except iOS devices. In other words, you’ll need to serve in both HLS and one other HTTP-based technology. In addition, not all implementations of basic streaming protocols are created equal: our testing of RTSP on core Android OS 2.x and 3.x devices yielded disappointing and inconsistent results.
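Serving “both HLS and one other HTTP-based technology” implies some per-device decision at request time. The sketch below shows one crude way to make it, assuming the other technology is DASH and that a substring check on the browser’s User-Agent header is good enough for a pilot. The function name and marker list are hypothetical, and real deployments usually rely on a media server or player library to make this choice.

```python
# Substrings that suggest an iOS device; an assumption for this sketch.
IOS_MARKERS = ("iphone", "ipad", "ipod")


def pick_delivery(user_agent):
    """Return 'hls' for iOS devices and 'dash' for everything else."""
    ua = user_agent.lower()
    if any(marker in ua for marker in IOS_MARKERS):
        return "hls"
    return "dash"


print(pick_delivery("Mozilla/5.0 (iPad; CPU OS 6_0 like Mac OS X)"))
print(pick_delivery("Mozilla/5.0 (Linux; Android 4.1; Nexus 7)"))
```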

The other area to consider is the delivery network, from CDMA to GSM to LTE to Wi-Fi, and the impact that has on bandwidth, quality and resolution. Some users will begin watching your content on one bandwidth, perhaps Wi-Fi, and be able to see the detail in your video content with no problem; when they switch over to a lower-bandwidth network, however, the corresponding drop in resolution may render your content unwatchable. We’ll talk more about that below.

Bottom Line: Mobile represents a challenge rolled up in an opportunity. How you define your mobile strategy will determine the effectiveness of your overall media push.

5. Navigate the confluence of bitrates, frame rates, and resolutions.

There are three primary factors when it comes to maximizing quality while minimizing bandwidth for a streaming video session: bitrate, frame rate, and resolution.

Frame rate is fairly obvious, and most streaming solutions these days opt for 30 frames per second (29.97 fps) due to the tradeoffs of losing fluid motion when dropping to half frame rate (15 fps). But bitrates and resolution are still key to determining whether an end user can view the content.

The easiest way to save bandwidth is to reduce the resolution of the stream to the resolution of most playback devices. If you shoot in 1080p, consider reducing to 720p or even lower: all iPads, with the exception of the newest iPad, have display resolutions below HD. Other devices, such as smartphones, have resolutions just barely above the older 800x600 resolution. A reduced resolution stream, even at full frame rate, can be about three times smaller than the next higher resolution.
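A quick way to sanity-check the savings from a lower resolution is to compare raw pixel counts. The Python sketch below does that for 1080p versus 720p. Pixel count is only a rough proxy, since the actual bitrate saving depends on the encoder settings and the content, so the figure it prints is a starting point rather than a prediction.

```python
def pixels(width, height):
    """Number of pixels in one frame at the given resolution."""
    return width * height


full_hd = pixels(1920, 1080)  # 1080p frame
hd_720 = pixels(1280, 720)    # 720p frame

# How many times more pixels the encoder must describe per frame at 1080p.
ratio = full_hd / hd_720
print(f"1080p carries {ratio:.2f}x the pixels of 720p")
```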

The other way to address overall bandwidth is to adopt an adaptive strategy. The HTTP-based technologies that we mentioned (HDS, HLS, DASH) are based on an adaptive bitrate (ABR) strategy. ABR is designed to serve up any one of multiple bitrates to an end user, with the proper bitrate being negotiated between the player and the server. If an end user’s device can handle the highest resolution, the player prompts the server to deliver the highest resolution; a few moments later, if network congestion interferes with the stream, the player will request a lower resolution or a lower quality at the same resolution, depending on how the ABR strategy has been defined in terms of the number of available versions at varying bitrates and resolutions.
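The player-side half of that decision can be sketched in Python as follows. The bitrate ladder, the 80 percent headroom factor, and all names are assumptions chosen for illustration; real players use more elaborate heuristics (buffer level, recent throughput history), so read this as a minimal model of the idea rather than any vendor’s algorithm.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    name: str
    height: int
    bitrate_kbps: int


# Hypothetical ladder of encoded versions of the same video.
LADDER = [
    Rendition("1080p", 1080, 5000),
    Rendition("720p", 720, 2500),
    Rendition("480p", 480, 1200),
    Rendition("240p", 240, 400),
]


def choose_rendition(measured_kbps, max_height, ladder=LADDER, headroom=0.8):
    """Pick the best version the screen can show and the network can carry."""
    budget = measured_kbps * headroom  # leave a margin for throughput dips
    # Ignore versions taller than the device's display.
    candidates = [r for r in ladder if r.height <= max_height]
    if not candidates:
        candidates = [min(ladder, key=lambda r: r.height)]
    affordable = [r for r in candidates if r.bitrate_kbps <= budget]
    if affordable:
        return max(affordable, key=lambda r: r.bitrate_kbps)
    # Nothing fits the budget: fall back to the lowest bitrate on offer.
    return min(candidates, key=lambda r: r.bitrate_kbps)


print(choose_rendition(8000, 1080).name)  # fast network, full HD screen
print(choose_rendition(2000, 1080).name)  # same screen, congested network
```

Calling the function again each time the measured throughput changes reproduces the behavior described above: the viewer steps down when the network degrades and back up when it recovers.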

Bottom Line: Choose to lower a resolution before lowering frame rate, but also explore ABR technologies that let you seamlessly offer a range of qualities, resolutions, and bitrates.

6. Dissect the benefits of self-served versus hosted delivery.

The days of difficult media server setups are past, thankfully, as more and more content is delivered via the HTTP protocol. It’s still not as easy as downloading a movie from iTunes, but media server setup and usage has made great strides in terms of being able to generate your own internal solution.

Having said that, it’s good to simultaneously test both internal and external solutions. Most external solutions from content delivery networks (CDNs) are based on the same media servers you’ll be testing internally, especially now as we move towards HTTP delivery. By testing an internal solution, you’ll be better at anticipating the issues you’d face when it comes time to move to a CDN.

I say “when” instead of “if” for two reasons: first, the cost of bandwidth to deliver live streams to your overall constituency will, at some point, exceed the cost of moving to a CDN. That’s the success tax that comes with streaming in a unicast world. Second, CDNs will have other benefits, such as analytics and the ability to replicate your content to multiple locations at a decent price point. The latter provides a chance to safeguard content and speed up delivery, while the former offers you a chance to see just how many people watched that CEO video we talked about at the outset.
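The “success tax” is easy to estimate, because in a unicast world every viewer receives a separate copy of the stream. The Python sketch below multiplies audience size by stream bitrate; the viewer count and bitrate are hypothetical, and no pricing is included because bandwidth and CDN rates vary too widely to assume.

```python
def unicast_egress_mbps(viewers, stream_kbps):
    """Peak outbound bandwidth when each viewer gets an individual stream."""
    return viewers * stream_kbps / 1000


def transfer_gigabytes(egress_mbps, seconds):
    """Total data sent at a steady rate (decimal gigabytes)."""
    return egress_mbps * seconds / 8 / 1000


# Hypothetical event: 500 concurrent viewers of a 1,500 kbps stream for 1 hour.
peak = unicast_egress_mbps(500, 1500)
total = transfer_gigabytes(peak, 3600)
print(f"Peak egress: {peak:.0f} Mbps, data sent: {total:.1f} GB")
```

Doubling the audience doubles both numbers, which is why a successful internal stream eventually outgrows the corporate connection.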

In looking at CDNs, though, don’t forget to consider online video platforms, many of which also now offer the benefits of a CDN. Even YouTube now offers live streaming and significant analytics, with a footprint that rivals that of the largest CDNs.

Bottom Line: Whether you go to external media serving immediately or choose to segment your media services between internal and external customers, don’t be afraid to trial run a test bed media server so that you gain a better understanding of what the CDN can offer that you can’t accomplish with an in-house solution.

Conclusion

These six areas scratch the surface of media creation and distribution. Yet mastery of these distinctions will help you best strategize the delivery and management tools required to successfully meet your organization’s vision of distributing its content to the widest possible audience. Pay special attention to the growing demand for mobile delivery. Consider one of the HTTP-based technologies to allow viewing on both sides of the corporate firewall, thanks to the transformation of port 80 and standard web servers into streaming media servers.

Finally, consider the benefits of hosting in-house versus hosted delivery. A number of clients I’ve worked with, once they understood the benefits, have chosen to launch delivery as an in-house project using the newest media servers. Once they accomplished this pilot project and whittled down the challenges of handling various acquisition and delivery formats, they had a much more confident approach to expanding their media delivery to CDN partners.

Tim Siglin is a frequent contributor to AV Technology and Streaming Media. You can reach him via writer@braintrustdigital.com.

Chapter Review

• The HTTP protocol is now well established as a streaming protocol; it even seems to offer a chance to stream content at lower latency than older proprietary solutions. On the technology front, though, DASH and HLS will continue to battle for supremacy, with DASH taking the fragmented MP4 (fMP4) approach and HLS taking the MPEG-2 Transport Stream (M2TS) approach to packetizing short chunks of a longer media file.

• Formats are confusing, but that’s why multi-format media servers were invented: to take the guesswork out of streaming content that uses a combination of AAC / H.264 for the base codec functionality. For acquisition and editing, though, you’re on your own to decide between the various formats.

• Mobile represents a challenge rolled up in an opportunity. How you define your mobile strategy will determine the effectiveness of your overall media push.

• Choose to lower a resolution before lowering frame rate, but also explore ABR technologies that let you seamlessly offer a range of qualities, resolutions, and bitrates.

• Whether you go to external media serving immediately or choose to segment your media services between internal and external customers, don’t be afraid to trial run a test bed media server so that you gain a better understanding of what the CDN can offer that you can’t accomplish with an in-house solution.

• Pay special attention to the growing demand for mobile delivery and consider one of the HTTP-based technologies to allow viewing on both sides of the corporate firewall, thanks to the transformation of port 80 and standard web servers into streaming media servers.

• Finally, consider the benefits of hosting in-house versus hosted delivery. A number of clients I’ve worked with, once they understood the benefits, have chosen to launch delivery as an in-house project using the newest media servers. Once they accomplished this pilot project and whittled down the challenges of handling various acquisition and delivery formats, they had a much more confident approach to expanding their media delivery to CDN partners.