Byte-Sized Lesson: Network Buffers

In the AV industry, we often think of the delivery of audio or video in terms of three major components: the source, the network, and the receiver or playout device. Recently, many researchers in the IT industry have changed their view of how data is delivered. The current thinking is that the importance of network buffers has not been sufficiently recognized. The AV industry uses the same IP stack as the IT industry, so shouldn’t the AV industry be studying the role of buffers as well?

Buffers are temporary storage areas that hold data while it awaits processing by a device. For example, a switch or router has input and output buffers for each interface. The sending encoder, computer, or camera also has both input and output buffers. Buffers vary in size. Router buffers routinely range from 16 megabytes to 256 megabytes. As a result, there often can be dozens, hundreds, or even thousands of packets in a buffer at any instant.
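To put those buffer sizes in terms of packets, here is a rough sketch of the arithmetic. It assumes full-size 1,500-byte Ethernet packets and binary megabytes; both are assumptions made for illustration, and the function name is hypothetical.

```python
# Rough capacity estimate: how many packets fit in a buffer of a given size.
# Assumptions (not from the article): 1,500-byte packets, 1 MB = 1024 * 1024 bytes.

BYTES_PER_MEGABYTE = 1024 * 1024


def packets_that_fit(buffer_megabytes: int, packet_bytes: int = 1500) -> int:
    """Return the number of whole packets a buffer of this size can hold."""
    buffer_bytes = buffer_megabytes * BYTES_PER_MEGABYTE
    return buffer_bytes // packet_bytes


if __name__ == "__main__":
    for size_mb in (16, 256):
        print(f"{size_mb} MB buffer holds up to {packets_that_fit(size_mb)} packets")
```

This is the upper bound for a completely full buffer. In normal operation a buffer is usually far from full, which is why the typical occupancy is much lower.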


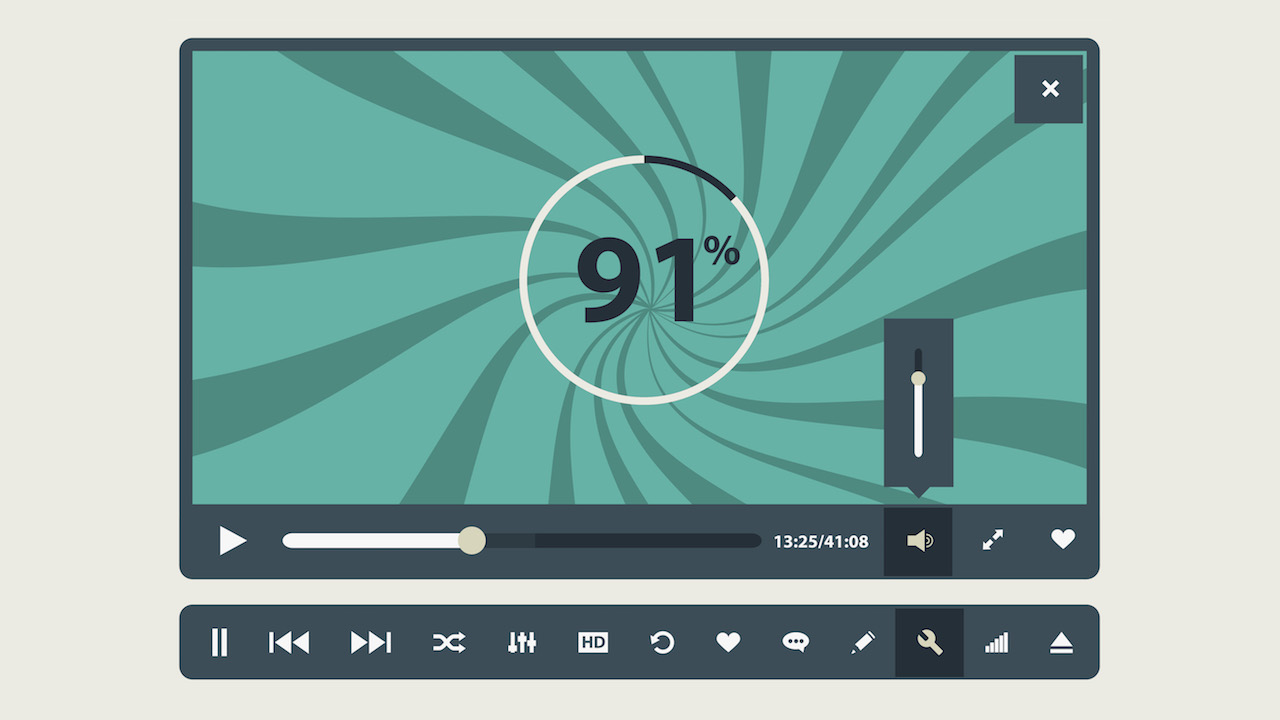

While buffers are necessary to prevent dropped packets, they also add delay, or latency, to the delivery of those packets. The research about buffers in the IT industry focuses on two questions: (1) How big should buffers be to accommodate a wide variety of traffic types? (2) How should the sending device estimate the size of the most congested buffer in the path? The second question has been the focus of intense study and has prompted recommendations for changes to the protocols.
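The delay a buffer adds can be approximated as the time needed to drain whatever is queued ahead of a packet. The sketch below assumes a buffer that empties at the full rate of its outgoing link and ignores all other sources of delay; the function name and the example figures are illustrative only.

```python
# Worst-case queueing delay: time to drain a buffer onto its outgoing link.
# Assumption: the buffer drains at the full link rate and nothing else adds delay.


def drain_delay_seconds(queued_bytes: int, link_bits_per_second: float) -> float:
    """Return the seconds needed to transmit queued_bytes at the given link rate."""
    return (queued_bytes * 8) / link_bits_per_second


if __name__ == "__main__":
    full_buffer = 16 * 1024 * 1024  # a full 16 MB buffer
    gigabit = 1_000_000_000         # 1 Gbps link
    delay_ms = drain_delay_seconds(full_buffer, gigabit) * 1000
    print(f"Full 16 MB buffer on a 1 Gbps link adds about {delay_ms:.0f} ms")
```

Under these assumptions, a full 16 MB buffer on a gigabit link adds roughly 134 ms of delay, which is significant for live audio or video.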

So, why the concern about the most congested buffer? Nearly 90 percent of worldwide network traffic is carried by TCP. TCP decides how much data to send based on the following idea: Start at a low sending rate and increase it rather aggressively until a report arrives that a buffer was too congested (overfilled) and dropped a packet. Then, cut the sending rate by 30 or 50 percent and start increasing it again. However, in modern gigabit and 10-gigabit networks spanning ever-increasing distances, this algorithm has a severe problem. The notification that a buffer dropped a packet may arrive at the source long after the packet was sent. By then, hundreds of additional packets may already be in transit. If the problem buffer is still full, these packets are likely to be dropped as well. In addition, the retransmission of the first dropped packet will be delayed because it must wait in line behind the packets that were already sent.
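The behavior described above can be sketched in a few lines. This is a deliberately simplified model, not the actual TCP algorithm: the rate grows by a fixed step each round rather than following TCP's real growth phases, and a drop is assumed whenever the rate exceeds a fixed bottleneck capacity. All names and parameters are hypothetical.

```python
# Simplified loss-based sender: increase until a drop, then back off and repeat.
# Assumptions: fixed additive step per round, and a drop whenever the sending
# rate exceeds the bottleneck capacity. Real TCP is considerably more involved.


def simulate_loss_based_sender(rounds, bottleneck_capacity,
                               start_rate=1.0, increase=1.0, backoff=0.5):
    """Return the sending rate used in each round of the simulation."""
    rate = start_rate
    history = []
    for _ in range(rounds):
        history.append(rate)
        if rate > bottleneck_capacity:
            rate *= (1 - backoff)   # drop reported: cut the rate (here by 50 percent)
        else:
            rate += increase        # no drop: keep probing for more capacity
    return history


def packets_sent_before_notice(link_bits_per_second, notice_delay_seconds,
                               packet_bytes=1500):
    """Estimate packets sent at full link rate while a drop report is in transit."""
    return int(link_bits_per_second * notice_delay_seconds / (packet_bytes * 8))


if __name__ == "__main__":
    print(simulate_loss_based_sender(rounds=8, bottleneck_capacity=4))
    print(packets_sent_before_notice(1_000_000_000, 0.010))
```

With these illustrative numbers, a sender on a 1 Gbps link that learns of a drop 10 ms late has already sent about 833 more full-size packets, which is the "hundreds of additional packets" problem.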

Currently, Google and some others are recommending significant changes to the TCP algorithm. In their design, dropped packets will not be the trigger for reducing the sending rate. Instead, the sender will try to estimate the size of the bottleneck buffer and maintain a sending rate that avoids overfilling it. However, getting widespread adoption of a modified TCP algorithm could take considerable time.
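One way to picture an estimate-driven sender is shown below. This is only a sketch of the general idea, not Google's design: it assumes the sender can measure delivery rate and round-trip time, and it caps the data in flight at the best observed rate multiplied by the lowest observed round-trip time, so that little is left to pile up in the bottleneck buffer. The function name and the sample values are hypothetical.

```python
# Estimate-driven sending limit (illustrative only, not an actual TCP variant).
# Assumption: each sample is a (delivery_rate_bits_per_second, round_trip_seconds)
# pair measured by the sender.


def in_flight_limit_packets(samples, packet_bytes=1500):
    """Return how many packets to keep in flight, based on path estimates."""
    best_rate = max(rate for rate, _ in samples)   # estimated bottleneck rate
    lowest_rtt = min(rtt for _, rtt in samples)    # round trip with an empty queue
    path_capacity_bits = best_rate * lowest_rtt    # data the path itself can hold
    return int(path_capacity_bits / (packet_bytes * 8))


if __name__ == "__main__":
    measurements = [
        (1_000_000_000, 0.020),  # 1 Gbps delivered, 20 ms round trip
        (800_000_000, 0.025),    # slower sample with some queueing delay
    ]
    print(in_flight_limit_packets(measurements))
```

The design choice here is that the limit comes from measurements of the path rather than from waiting for a packet to be dropped.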

Phil Hippenstel, EdD, is a regular columnist with AV Technology. He teaches information systems at Penn State Harrisburg.
