Understanding Video Codecs

Codec is one of those newly coined words that has been in the dictionary for only a few years, yet what it represents may be one of the most important pieces of technology in all of AV. There are at least two derivations of the term: some believe it is a contraction of "compression/decompression," and some insist it comes from "coder/decoder." Some people think of the set-top box attached to a videoconferencing display, and some envision a codec as a bunch of programming code. All are correct, and no matter how you interpret the word, a codec is a critical component in the process of digital audio and video signal transmission.

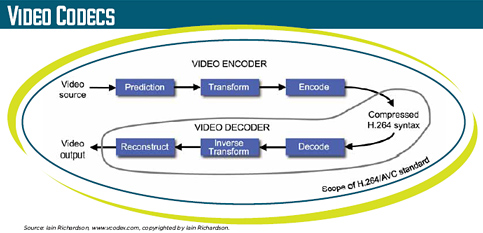

A video codec is a combination of hardware and/or software that creates a binary stream of data that represents the video and audio captured by a camera. Encoders differ from capture devices primarily in what they are intended to create as output. A capture card usually creates a binary stream that will be stored as a file. An encoder usually creates a stream of data that is to be transferred to a second device. This second device has various names such as set-top box or decoder, but it essentially reverses the process carried out by the encoder and re-creates the representation of the scene picked up by the camera.

Video codecs basically do three things: sample, quantize, and compress. If the camera is analog, they sample the output signal. The rate at which this is done is referred to as the sampling rate. Each sample is then converted into a certain number of bits (often 8) during the analog-to-digital (A/D) conversion process. This is called quantization. Finally, the codec must compress the resulting bit stream because it usually carries too much information to be transmitted efficiently.
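As a rough illustration of the quantization step, the Python sketch below maps an analog level onto one of 256 codes (8 bits). The function name `quantize` and the normalized 0.0 to 1.0 input range are assumptions made for the example, not part of any standard.

```python
def quantize(level, v_min=0.0, v_max=1.0, bits=8):
    """Map an analog level in [v_min, v_max] to an integer code of `bits` bits."""
    codes = 2 ** bits                            # 256 possible codes for 8 bits
    clamped = min(max(level, v_min), v_max)      # keep out-of-range input legal
    fraction = (clamped - v_min) / (v_max - v_min)
    return round(fraction * (codes - 1))         # nearest available code


if __name__ == "__main__":
    for level in (0.0, 0.25, 1.0):
        print(level, "->", quantize(level))
```

More bits per sample give finer steps between codes, at the cost of a larger bit stream for the compression stage to handle.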

Before any of this processing occurs, the image must be captured by the camera, whether it's a pan-tilt-zoom camera, a videoconferencing camera, or a digital camcorder. In the U.S., the technique is based on the same process devised in the 1940s when only black and white images were possible. The camera sensor scans left to right to create lines, and then scans vertically to record about 480 lines per scene. This creates video frames at the rate of 30 frames per second. The number of positions on the line where the samples are gathered is usually 720 in standard definition TV. Each sampling point on a line is one pixel and corresponds to a position on the output device (video monitor or TV) that will be energized. In monochromatic (black and white) TV, only the brightness of the sampled position needs to be represented. However, when color was added, the chroma value was also captured, which consists of a representation of the amount of red, blue, and green measured by the camera. Through a clever mathematical computation, it was determined that these four signals (brightness plus the three colors) could be derived by the receiver if only three were transmitted. You can think of the three (Y, Cr, and Cb) as brightness, the red signal, and the blue signal; more precisely, Cr and Cb carry the difference between the red or blue value and the brightness.
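The "three signals instead of four" idea can be sketched in a few lines of Python. The example assumes the luma weights from the CCIR-601 (now ITU-R BT.601) recommendation and color values normalized to the range 0.0 to 1.0; the function names are illustrative. The point to notice is that green is never sent: the receiver works it out from Y, Cb, and Cr.

```python
# Luma weights from CCIR-601 / ITU-R BT.601 (they sum to 1.0)
KR, KG, KB = 0.299, 0.587, 0.114


def rgb_to_ycbcr(r, g, b):
    """Encoder side: turn red, green, blue into brightness plus two differences."""
    y = KR * r + KG * g + KB * b
    cb = (b - y) / (2 * (1 - KB))   # scaled blue-minus-brightness
    cr = (r - y) / (2 * (1 - KR))   # scaled red-minus-brightness
    return y, cb, cr


def ycbcr_to_rgb(y, cb, cr):
    """Receiver side: rebuild red and blue, then solve the luma equation for green."""
    r = y + 2 * (1 - KR) * cr
    b = y + 2 * (1 - KB) * cb
    g = (y - KR * r - KB * b) / KG
    return r, g, b


if __name__ == "__main__":
    y, cb, cr = rgb_to_ycbcr(0.8, 0.4, 0.2)
    print("sent:     ", round(y, 4), round(cb, 4), round(cr, 4))
    print("recovered:", [round(v, 4) for v in ycbcr_to_rgb(y, cb, cr)])
```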

SAMPLING

Imagine the image seen by the camera as being covered with a grid consisting of 480 horizontal lines and 720 vertical lines. The camera could take a sample of brightness and chroma at the center of each square. If each sample were represented with 8 bits for brightness and 10 bits for each of the three colors (10 bits per color is common in the industry), the resulting bit stream for 30 frames/second would approach 400 Mb per second (720 x 480 x 30 x 38 bits is about 394 Mb/s)! This would clearly not be appropriate for a single standard definition (SD) TV signal. Consequently, the CCIR-601 recommendation adopted in 1982 allowed for a sampling technique called 4:2:2 in which the luminance signal is sampled at 720 positions on each line, but each chrominance signal is sampled at alternate positions, 360 times per line. An additional sampling technique called 4:2:0 modifies this procedure and also samples the chrominance on only every other line vertically. These techniques are called color subsampling.

So, why is the brightness sampled more often than the color? Our eyes are more sensitive to changes in brightness than to changes in color. Therefore, the picture seems more accurate to our mind if we have more data about brightness than about color.
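The sampling arithmetic is easy to check. The Python sketch below works out the raw bit rate for a 720 x 480 picture at 30 frames per second, first with 8 bits of brightness plus 10 bits for each of three colors, then for the subsampling schemes with an assumed 8 bits per sample. The helper names and the 8-bit assumption for the subsampled cases are choices made for the example.

```python
WIDTH, HEIGHT, FPS = 720, 480, 30

# Chroma samples per pixel, both chroma signals combined.
# 4:2:2 halves each chroma signal horizontally; 4:2:0 also halves it vertically.
CHROMA_SAMPLES_PER_PIXEL = {"4:4:4": 2.0, "4:2:2": 1.0, "4:2:0": 0.5}


def bit_rate_mbps(bits_per_pixel):
    """Raw bit rate in megabits per second for an SD picture."""
    return WIDTH * HEIGHT * FPS * bits_per_pixel / 1e6


def subsampled_bits_per_pixel(scheme, bits_per_sample=8):
    """Average bits per pixel: one luma sample plus the chroma share."""
    return bits_per_sample * (1 + CHROMA_SAMPLES_PER_PIXEL[scheme])


if __name__ == "__main__":
    print("8-bit brightness + 3 x 10-bit color:", bit_rate_mbps(8 + 3 * 10), "Mb/s")
    for scheme in CHROMA_SAMPLES_PER_PIXEL:
        rate = bit_rate_mbps(subsampled_bits_per_pixel(scheme))
        print(scheme, "at 8 bits per sample:", rate, "Mb/s")
```

Under these assumptions the unsubsampled signal comes to roughly 394 Mb/s, while 4:2:2 needs about 166 Mb/s and 4:2:0 about 124 Mb/s, all before any compression is applied.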

These techniques (and many others) are used in the standards written by the Moving Picture Experts Group (MPEG). This group's mission was, and continues to be, to standardize compressed audio and video. The MPEG methods also account for our sense of motion. Since we are more sensitive to horizontal motion than to vertical motion, more information is transferred to the receiver about horizontal movement. The general category of methods that are based on the way we perceive things is called perceptual coding. Color subsampling and motion estimation are examples of perceptual coding. Such sampling and quantization create the bit stream that is sent to the part of the codec that does compression.

COMPRESSION

There are two categories of compression: lossless and lossy. Lossless compression allows the data to be represented in fewer bits but also allows the procedure to recover all of the original data when it is decompressed. Zipping a file and disk compression are examples of this. It is based on a rather simple idea. Most text is stored in an ASCII-style encoding in which each character occupies eight bits. In nearly all text, some characters appear more often than others. For example, space, e, and t are very common. Therefore, in lossless compression, such characters are represented in fewer than eight bits while uncommon characters such as z and q may be represented in more than eight bits. Lossless compression rarely yields more than an 80 percent reduction in the number of bits needed, so it is rarely used on its own to deliver audio or video. The apparent exception is the G.711 voice codec used in most digital phones. Here, the codec produces a relatively low bit rate stream (64 kb/s); however, the stream is not compressed.
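The "common characters get short codes" idea can be tried out with Huffman coding, one well-known variable-length scheme. The article does not name a specific algorithm, so treat this Python sketch as an illustration of the principle rather than a description of what any particular zip tool does; the function name and sample sentence are made up for the example.

```python
import heapq
from collections import Counter


def huffman_code_lengths(text):
    """Return {character: code length in bits} for a Huffman code built from text."""
    counts = Counter(text)
    if len(counts) == 1:                      # one distinct symbol still needs 1 bit
        return {next(iter(counts)): 1}
    # Heap entries: (total count, unique tie-breaker, {char: depth so far})
    heap = [(n, i, {ch: 0}) for i, (ch, n) in enumerate(counts.items())]
    heapq.heapify(heap)
    tie = len(heap)
    while len(heap) > 1:
        n1, _, d1 = heapq.heappop(heap)       # the two least frequent groups...
        n2, _, d2 = heapq.heappop(heap)
        merged = {ch: depth + 1 for ch, depth in {**d1, **d2}.items()}
        heapq.heappush(heap, (n1 + n2, tie, merged))   # ...sink one level deeper
        tie += 1
    return heap[0][2]


if __name__ == "__main__":
    sample = "the zebra ate the quiet tree at the street"
    lengths = huffman_code_lengths(sample)
    coded_bits = sum(lengths[ch] for ch in sample)
    print("fixed 8-bit size:", 8 * len(sample), "bits")
    print("Huffman size:    ", coded_bits, "bits")
    print("bits for 'e':", lengths["e"], " bits for 'z':", lengths["z"])
```

Because the code is built from the message itself, nothing is lost: the receiver can rebuild every character exactly, which is what makes the method lossless.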

Instead, audio and video are compressed with a lossy compression technique such as those described in MPEG-1. For example, values such as those sampled for brightness are sorted and stored based on how much they vary from the average value in the set. Let's say the values in the set are 128, 125, 128, and 131. They could be represented as 0, -3, 0, and +3. This does two things. First, it lowers the values that must be processed. Second, it generally creates many values such as 0, -1, and +1 that are repeated. After these lower values are ranked from high to low, the low values are simply discarded. The receiver can use 0 to replace them when the original brightness values are reestablished without introducing too much error in the video signal.
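Here is a toy Python version of that idea. It is a simplification of the description above, not the actual MPEG-1 process (which works on blocks of transformed values); the function names and the cutoff of 1 are assumptions made for the example.

```python
def to_deviations(values):
    """Express each value as its difference from the (rounded) average."""
    mean = round(sum(values) / len(values))
    return mean, [v - mean for v in values]


def discard_small(deviations, threshold=1):
    """Lossy step: differences at or below the threshold are replaced by 0."""
    return [d if abs(d) > threshold else 0 for d in deviations]


def reconstruct(mean, deviations):
    """Receiver side: add the average back to each kept difference."""
    return [mean + d for d in deviations]


if __name__ == "__main__":
    samples = [128, 127, 129, 131, 125]
    mean, devs = to_deviations(samples)
    kept = discard_small(devs)
    print("deviations:   ", devs)
    print("after discard:", kept)
    print("reconstructed:", reconstruct(mean, kept))
```

The reconstructed values differ from the originals by at most the cutoff, which is the trade a lossy codec makes: a small, hopefully invisible error in exchange for long runs of zeros that take very few bits to send.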

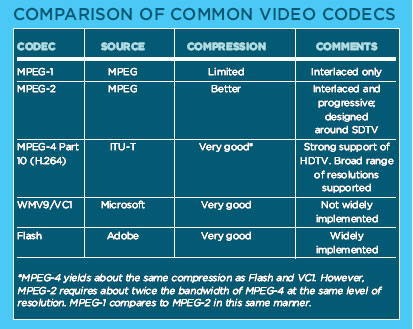

COMMON CODECS

Most video codecs are based on one of the standards and are usually named for the one whose technique they follow. (See sidebar "Comparison of Common Video Codecs.") For example, you can download software MPEG-1 codecs from the internet. Most hardware video encoders in use today use an MPEG-2 codec. You need to select the codec based in part on what the receiving decoder or set-top box (STB) can decode. Next we will discuss what is specified in the standards.

In MPEG-1, the video compression supports only progressive output devices. These devices display 30 full frames per second. Older TVs displayed 60 half-frames per second, showing the odd lines first and then the even lines. This was called interlaced format. Newer TVs that are interlaced have an "i" designation as in 480i or 1080i. So, MPEG-1 codecs cannot have the output displayed on older standard CRT TVs. On the other hand, computer monitors are progressive and will display MPEG-1 streams.

Also, MPEG-1 first described a technique that involved predicting the position of an object in the scene based on where that object first appeared, in order to remove temporal redundancy (information repeated from one frame to the next). Suppose the camera presents 12 frames of data to the codec. In the first frame an arrow is being released from a bow. In the fourth, the arrow is 30 feet in front of the bow. The codec records nearly all of the information about the first frame and the new position of the arrow in the fourth frame. The receiver calculates the position of the arrow in frames two and three. That information is not included in the MPEG stream. This method allowed three kinds of frames to be created. I-frames are essentially JPEG images of the entire scene, as in frame one. Frames with predicted information, such as frame four, are called P-frames. Finally, frames that are reconstructed based on previous and subsequent frames, such as frames two and three, are called B-frames.
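The arrow example can be played out in a few lines of Python. This sketch assumes the receiver fills in the missing frames by simple straight-line interpolation between the two known positions; real decoders work with motion vectors and correction data, so the function below is only a stand-in for the idea, and its name is made up.

```python
def interpolate_b_frames(start_pos, end_pos, start_frame, end_frame):
    """Estimate an object's position in the frames between two known frames."""
    span = end_frame - start_frame
    return {
        frame: start_pos + (end_pos - start_pos) * (frame - start_frame) / span
        for frame in range(start_frame + 1, end_frame)
    }


if __name__ == "__main__":
    # Frame 1 (I-frame): arrow at the bow, 0 feet. Frame 4 (P-frame): 30 feet out.
    in_between = interpolate_b_frames(0, 30, start_frame=1, end_frame=4)
    for frame, feet in in_between.items():
        print(f"frame {frame} (B-frame): arrow about {feet} feet from the bow")
```

Only the positions for frames one and four had to travel over the network; the positions for frames two and three (10 and 20 feet under this assumption) were computed at the receiver.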

Using techniques like this allows for a significant reduction in the amount of information that must be sent to the receiver. While a typical I-frame might be several tens of thousands of bytes, a P-frame might be half that much information and a B-frame might be as small as several thousand bytes. The main variable in the size of the frames is the amount of variability in brightness and color and the amount of motion between frames.
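To see what those frame sizes mean for the bit rate, the Python sketch below adds up one 12-frame sequence. The byte counts (40,000 for an I-frame, 20,000 for a P-frame, 4,000 for a B-frame) and the frame pattern are hypothetical values picked to fit the ranges described above, not measurements from a real encoder.

```python
# Hypothetical sizes in bytes, chosen to match the ranges in the text
FRAME_BYTES = {"I": 40_000, "P": 20_000, "B": 4_000}
GOP_PATTERN = "IBBPBBPBBPBB"   # assumed 12-frame sequence: 1 I, 3 P, 8 B
FPS = 30


def gop_bytes(pattern=GOP_PATTERN, sizes=FRAME_BYTES):
    """Total bytes needed for one sequence of frames."""
    return sum(sizes[frame_type] for frame_type in pattern)


def average_mbps(pattern=GOP_PATTERN, sizes=FRAME_BYTES, fps=FPS):
    """Average bit rate in Mb/s if the pattern repeats continuously."""
    return gop_bytes(pattern, sizes) * 8 * fps / len(pattern) / 1e6


if __name__ == "__main__":
    print("mixed I/P/B frames:", average_mbps(), "Mb/s")
    print("I-frames only:     ", average_mbps("I" * 12), "Mb/s")
```

With these made-up numbers, sending every frame as an I-frame would take 9.6 Mb/s, while the mixed pattern averages about 2.6 Mb/s.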

WHAT IS H.264?

H.264 is an industry standard for video compression. Recommendation H.264: Advanced Video Coding is a document published by the international standards bodies ITU-T (International Telecommunication Union) and ISO/IEC (International Organization for Standardization/International Electrotechnical Commission). It defines a format (syntax) for compressed video and a method for decoding this syntax to produce a displayable video sequence. The standard document does not actually specify how to encode (compress) digital video - this is left to the manufacturer of a video encoder - but, in practice, the encoder is likely to mirror the steps of the decoding process. The H.264/AVC standard was first published in 2003. It builds on the concepts of earlier standards such as MPEG-2 and MPEG-4 Visual, and offers the potential for better compression efficiency (i.e., better-quality compressed video) and greater flexibility in compressing, transmitting, and storing video. (Source: Iain Richardson, www.vcodex.com, copyrighted by Iain Richardson.)

The MPEG-2 standard described a codec that supported interlaced presentation and improved the compression algorithm. It also increased the levels of resolution supported and introduced a framework for the formats used in high definition TV (HD). This is currently the most widely deployed codec. MPEG-4 and H.264 (which is also published as MPEG-4 Part 10) improved the compression algorithm even more and expanded the range of supported resolutions and frame rates. With H.264, there are formats to support mobile devices like cell phones that require only 56 kb/s. On the other hand, there are formats for production studios that offer such high quality that they are streamed at 27 Mb/s.

Finally, a word about encoders. There is little difference between an encoder and a video codec. In common usage, a codec is a device that does the quantization and compression. An encoder includes this function but usually does even more preparation of the stream such as the creation of the IP packets that are sent. More often than not, the terms are interchangeable.

Phil Hippensteel is an industry consultant and researcher. He routinely presents at major conferences and is an assistant professor at Penn State University. He can be reached at pjh15@psu.edu.
