Smarter Searching & Managing Metadata to Optimize ROI

With organizations spending an increasing amount of financial, technological, and human resources on video production, the logical next step is to put these video libraries to good use. Both clips and long-form presentations can be re-accessed and repurposed virtually ad infinitum, whether for training, marketing, sales, internal and external communications, or education. The challenge: now that you’ve got all of this video, how do you ensure that your users can actually find it when they need to?

Kaltura says that its CaptureSpace helps create searchable, interactive video that can be viewed on any device at any time. Traditionally, those producing the video are charged with entering metadata, and, like everything else, you only get out of it what you put in. If someone’s search is based on a certain term, the video may not show up in the results if it hasn’t been adequately tagged. The other issue is that while the video may pop up on the list, the relevant piece of it—the phrase “quadratic equation” that someone is specifically searching for—may be 50 minutes into an hour-long presentation, rendering search, and access cumbersome. To address this, some video management platform developers are offering features that enable users to search based on speech—what is actually being said in the video—to narrow their search time. But how reliable are these solutions?

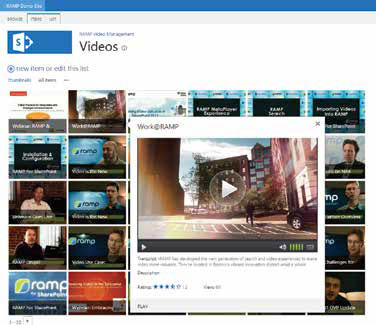

RAMP, the Boston, Massachusetts-based developer of the MediaCloud, MetaQ, and MetaPlayer video management technologies, says that its technology lets users ingest an audio or video file and automatically create a time-coded transcript, with a thumbnail assigned to every 10 seconds of video. The thumbnails are then tagged with time-coded entities (people, places, or things), so that retrieval can be based on the spoken word. “It becomes a fingerprint of metadata that wraps around every video file that we process,” explained Tom Wilde, RAMP’s CEO. “Now, all of a sudden, you have a video that can actually behave a lot like a document because it has this rich, time-coded payload of metadata with it.”
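To make the idea of time-coded metadata more concrete, here is a minimal Python sketch of how a transcript broken into time-stamped segments lets a search land on the spoken phrase rather than at the start of the video. It is not RAMP’s implementation or API: the `Segment` structure, the `find_phrase` and `to_timecode` helpers, and the sample transcript are all hypothetical, and only the 10-second thumbnail interval comes from the description above.

```python
from dataclasses import dataclass

# Taken from the article: a thumbnail is assigned to every 10 seconds of video.
THUMBNAIL_INTERVAL_S = 10


@dataclass
class Segment:
    """One time-coded chunk of a hypothetical speech-to-text transcript."""
    start_s: float  # offset from the start of the video, in seconds
    text: str


def to_timecode(seconds: float) -> str:
    """Format a second offset as HH:MM:SS."""
    hours, rem = divmod(int(seconds), 3600)
    minutes, secs = divmod(rem, 60)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}"


def find_phrase(segments: list[Segment], phrase: str) -> list[tuple[str, int]]:
    """Return (timecode, thumbnail number) for every segment containing phrase.

    Matching is a simple case-insensitive substring test, so a phrase that is
    split across two segments will be missed.
    """
    needle = phrase.lower()
    hits = []
    for seg in segments:
        if needle in seg.text.lower():
            thumbnail = int(seg.start_s // THUMBNAIL_INTERVAL_S)
            hits.append((to_timecode(seg.start_s), thumbnail))
    return hits


if __name__ == "__main__":
    transcript = [
        Segment(0.0, "Welcome to today's algebra lecture."),
        Segment(2995.0, "Now let's solve a quadratic equation."),
        Segment(3010.0, "Every quadratic equation has at most two real roots."),
    ]
    for timecode, thumbnail in find_phrase(transcript, "Quadratic Equation"):
        print(timecode, "thumbnail", thumbnail)
```

Run as a script, it prints `00:49:55 thumbnail 299` and `00:50:10 thumbnail 301`, so a viewer could jump directly to the point roughly 50 minutes into the lecture where the phrase is spoken.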

Automated speech-to-text also has a growing role for organizations that need to communicate in several languages. “If you have metadata around your video in English, that metadata can be used to translate into other languages and give more access to people for your video content,” Wilde explained.

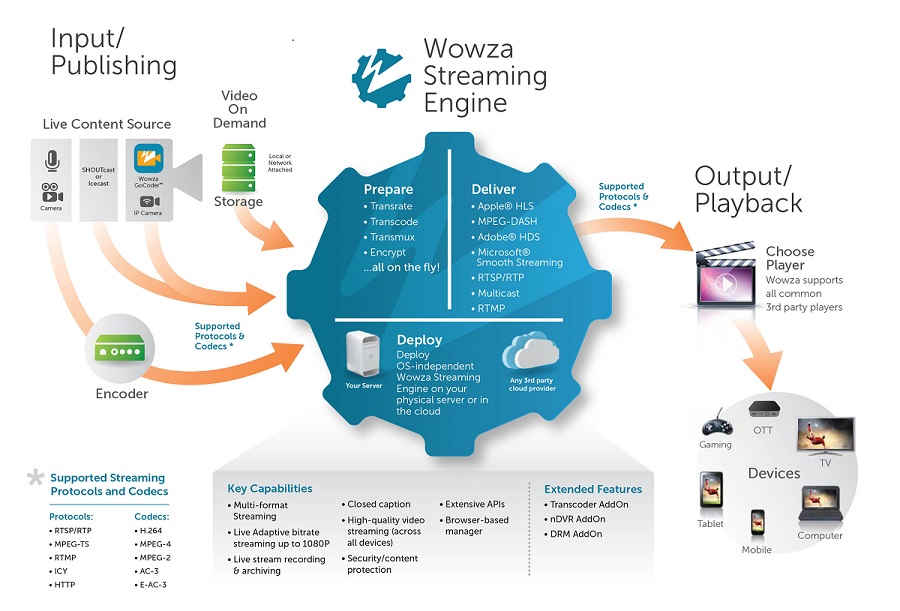

Wowza streaming work-flow Whether or not your organization would benefit from speech-to-text functionality depends on how much video you’re producing, and how long the productions tend to be. “If you’re just getting started, or you’ve got on the order of hundreds of videos, user-entered metadata is probably fine for you—you’re going to be able to find the content you need that way,” said Andy Howard, founder and managing director of Howard & Associates, a video consulting firm based in Madison, Connecticut. However, if your videos are numerous and lengthy, that’s another story. “If they’re an hour, hour-and-a-half long, being able to drill them down into sections is very important.” Lecture capture in the education space, or companies producing highly technical engineering videos, are two examples of where speech-to-text features are extremely useful.

For organizations that aren’t in refresh mode, and therefore can’t yet take advantage of speech-to-text capabilities, Howard advises tech managers to encourage their users to make the most of the tools at hand. “Really think about the terms and the things that you are talking about that are going to be searched, and make sure you have a lot of tags and keywords if you have that functionality available,” he said. He also counsels tech managers to encourage end users to rate and comment on the videos they watch, which pushes the strongest material to the top of search listings. In that respect, it works much like search engine optimization (SEO).

When planning a video refresh, tech managers should ask their vendor whether it offers advanced speech-to-text, or whether the feature is on its product road map; if it isn’t, and your current and projected needs call for it, you may have to switch to a new solution. “Start planning for it now, because it takes a while to upgrade the technology or to transition to a new platform.”

RAMP offers an end-to-end, cloud-based video content platform to enhance data-driven viewer experiences.

Streaming engine developer Wowza Media Systems, headquartered in Evergreen, Colorado, enables video streams to be accompanied by a caption stream: at the source, the live text is captured as captions, which are delivered in the encoded stream. “When it comes in, we repackage those captions to match the output streams,” explained Chris Knowlton, Wowza vice president and streaming industry evangelist. The same capability is available in the company’s on-demand offerings. “If there is a captions file associated with it (either the captioning is embedded in the video, or there is a sidecar file of captions created during the event or after the fact), we can take those and pull them into an on-demand presentation in the various formats we support.” Knowlton notes that captioning is popular with organizations whose viewers are on the go and may not be able to hear the audio well wherever they are watching. In these applications, captions give viewers another way to consume the videos they need to watch.
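A sidecar captions file is itself time-coded text, which is one reason captions can double as search metadata. The Python sketch below parses a WebVTT file into (start, end, text) cues. WebVTT is used here only as an example of a common sidecar format; the article does not say which formats Wowza supports, and this is not Wowza’s code. The `parse_vtt` and `to_seconds` helpers are hypothetical, and the parser is deliberately simplified: it ignores cue settings and styling and leaves inline tags in place.

```python
import re

# A WebVTT timestamp: "HH:MM:SS.mmm", or "MM:SS.mmm" when the hours are omitted.
TIMESTAMP = r"(?:(\d+):)?(\d{2}):(\d{2})\.(\d{3})"
# A cue timing line, e.g. "00:00:01.000 --> 00:00:04.500 align:start".
TIMING_LINE = re.compile(TIMESTAMP + r"\s+-->\s+" + TIMESTAMP)


def to_seconds(hours, minutes, seconds, millis) -> float:
    """Convert captured timestamp fields to seconds; hours may be None."""
    return int(hours or 0) * 3600 + int(minutes) * 60 + int(seconds) + int(millis) / 1000


def parse_vtt(text: str) -> list[tuple[float, float, str]]:
    """Return (start_s, end_s, caption_text) for each cue in a WebVTT document.

    Simplified: cue settings are ignored, blocks without a timing line
    (the WEBVTT header, NOTE and STYLE blocks) are skipped, and inline
    tags such as <v Speaker> are left in the caption text.
    """
    cues = []
    # Cues are separated by blank lines; normalize Windows line endings first.
    blocks = re.split(r"\n\s*\n", text.replace("\r\n", "\n").strip())
    for block in blocks:
        lines = block.split("\n")
        # The timing line may be preceded by an optional cue identifier.
        for i, line in enumerate(lines):
            match = TIMING_LINE.search(line)
            if match:
                fields = match.groups()
                start = to_seconds(*fields[:4])
                end = to_seconds(*fields[4:])
                # Multi-line captions are joined into one searchable string.
                caption = " ".join(part.strip() for part in lines[i + 1:])
                cues.append((start, end, caption))
                break  # one timing line per cue
    return cues
```

For a file containing a cue timed `00:01:02.250 --> 00:01:05.000` with the text “Let’s review the numbers.”, `parse_vtt` yields `(62.25, 65.0, "Let's review the numbers.")`. Cues in this form could then be indexed the same way as a speech-to-text transcript, so captions created for viewers on the go might also make the video easier to search.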

Wilde argues that the ROI on technologies like his company’s is pretty straightforward. “Imagine if Google had built a search engine that only indexed the title of the documents that it found, rather than [what was in] the body of the documents. That’s sort of been the state of the art of video search historically,” he said. ROI for more advanced systems, he explains, rests on three factors: content discovery (if someone can’t find your content, it’s useless); engagement (keeping viewers engaged long enough to watch the video they’ve searched for, as well as others in its category); and monetization (viewers have found the video and watched it, and now is the chance to get them to take action, whether by clicking on an ad or related content or by filling out a lead generation form).

While these factors make the case for ROI, the most compelling reason to care about metadata is simpler: the money your organization spends on video production is wasted if people cannot easily search for and retrieve that content. “There is no excuse for not focusing on this aspect of your video strategy right now because the benefits are so obvious and extreme,” Wilde said. “Not having a strategy for video metadata is really under-leveraging the investment you put into your video content. Video content is expensive to produce. It’s like you’re producing it and you’re putting it in the closet where no one can find it and you can’t earn any money for it.”

And, chances are, that storage closet is costing you money as well.

Smart Storage

For tech managers, RAID (Redundant Array of Independent Disks) should be part of every organization’s video strategy, according to Andy Howard, founder and managing director at Howard & Associates. “You don’t ever want to have a single disk go down and you lose all the content that’s on that disk, because disks go bad,” he said. With a redundant RAID configuration, content is mirrored or protected with parity data across multiple disks, so that if one disk fails, the content remains safe and accessible from the disks that are still operational.
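As a rough illustration of how parity-based RAID (RAID 5, for example) survives a single disk failure, the Python sketch below computes an XOR parity block for one stripe of data and rebuilds a missing block from the survivors. For simplicity it keeps parity on the last disk, as RAID 4 does, whereas RAID 5 rotates parity across all disks; the function names are hypothetical, and real controllers work on large fixed-size blocks rather than a few bytes.

```python
from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """Byte-wise XOR of equally sized blocks."""
    if not blocks or len({len(b) for b in blocks}) != 1:
        raise ValueError("need one or more blocks of equal size")
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def make_stripe(data_blocks: list[bytes]) -> list[bytes]:
    """Lay data blocks across disks and append one XOR parity block.

    Parity sits on the last disk here (RAID 4 style); RAID 5 rotates it.
    """
    return list(data_blocks) + [xor_blocks(data_blocks)]


def rebuild(stripe: list[bytes], failed_disk: int) -> bytes:
    """Recover the failed disk's block by XOR-ing every surviving block."""
    survivors = [block for i, block in enumerate(stripe) if i != failed_disk]
    return xor_blocks(survivors)


if __name__ == "__main__":
    stripe = make_stripe([b"VIDE", b"O-CL", b"IP01"])  # three data disks + parity
    print(rebuild(stripe, failed_disk=1))  # b'O-CL'
```

Because XOR-ing a value with itself cancels it out, combining the parity block with the surviving data blocks leaves exactly the lost block. The same arithmetic also shows the limit of single parity: with two disks gone, there is not enough information left to rebuild either one.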

Howard also advises tech managers to consider establishing data center redundancy for video content. “If you have two data centers where your management software is located, either duplicate the content in those data centers or create a cluster where the content is spread over those two data centers,” he explained. He also notes that it’s useful to examine which video content will inevitably go stale; a CEO’s year-end broadcast may not be relevant 18 months after it aired, for example. “You need to think about how expensive storage is, and how some of this [less-relevant] content can be archived,” while still keeping it available in case someone wants to reference it down the road.
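Howard’s archiving advice can be expressed as a simple rule. In the hypothetical Python sketch below, any asset at least 18 months old (the article’s example for a CEO’s year-end broadcast) moves from a primary storage tier to a cheaper archive tier, while its catalog entry, and therefore its metadata, stays in place so it can still be found. The `Asset` record, the tier names, and the single fixed threshold are assumptions; in practice the cutoff would likely vary by content type.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical threshold taken from the article's example of a CEO's
# year-end broadcast losing relevance after about 18 months.
STALE_AFTER_MONTHS = 18


@dataclass
class Asset:
    title: str
    published: date
    tier: str = "primary"  # hypothetical tiers: "primary" or "archive"


def months_between(earlier: date, later: date) -> int:
    """Number of whole calendar months from earlier to later."""
    months = (later.year - earlier.year) * 12 + (later.month - earlier.month)
    if later.day < earlier.day:
        months -= 1  # the final month is not yet complete
    return months


def apply_archive_policy(assets: list[Asset], today: date) -> list[str]:
    """Move stale primary-tier assets to the archive tier and return their titles.

    Assets are only re-tiered, never deleted, so their catalog entries and
    metadata remain available for search.
    """
    moved = []
    for asset in assets:
        if asset.tier == "primary" and months_between(asset.published, today) >= STALE_AFTER_MONTHS:
            asset.tier = "archive"
            moved.append(asset.title)
    return moved


if __name__ == "__main__":
    library = [
        Asset("CEO year-end broadcast", date(2013, 12, 20)),
        Asset("New-hire onboarding", date(2015, 3, 1)),
    ]
    print(apply_archive_policy(library, today=date(2015, 6, 20)))
```

With these sample dates, only the year-end broadcast (exactly 18 months old) is moved; the three-month-old onboarding video stays on primary storage.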

Finding the Silver Lining

One of the big decisions involved in video management is whether to host the software on-premises or subscribe to a SaaS provider. Zohar Babin, senior director of community and ecosystem partnerships at video management platform developer Kaltura Inc. in New York, N.Y., reminds tech managers that the choice doesn’t have to be so black and white.

“You may not want to have it on a third-party cloud, but you may actually have a good relationship with a larger vendor [such as Amazon], so you make sure that the platform of choice can run on those systems,” he said. Alternatively, tech managers may opt for a hybrid scenario, in which the actual content is stored locally but the metadata is stored in the cloud, perhaps in the video management provider’s cloud. “Instead of managing software, all you need to do is provide storage capacity somewhere.”

Carolyn Heinze is a freelance writer/editor.

Info

HOWARD & ASSOCIATES

www.howard-associates.com

KALTURA

www.kaltura.com

RAMP

www.ramp.com

WOWZA MEDIA SYSTEMS

www.wowza.com

Carolyn Heinze has covered everything from AV/IT and business to cowboys and cowgirls ... and the horses they love. She was the Paris contributing editor for the pan-European site Running in Heels, providing news and views on fashion, culture, and the arts for her column, “France in Your Pants.” She has also contributed critiques of foreign cinema and French politics for the politico-literary site, The New Vulgate.