Viewers today are used to high compression ratios and the artifacts that come with wireless and mobile video (H.264/AVC or H.265/HEVC). But there is a threshold beyond which the experience is so poor that they stop watching, and the resulting loss of revenue can be significant: a 2013 study* predicted global content brands would lose $20 billion by 2017 due to poor video quality. Poor-quality video also means a high volume of help desk tickets. Identifying the different types of compression artifacts, and understanding when and where they occur, can help greatly when troubleshooting.

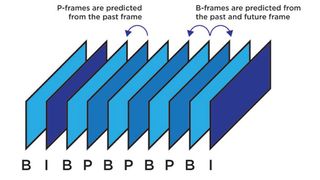

Artifacts are either location-based (spatial) or time-/sequence-based (temporal). Spatial artifacts are commonly seen when the video is paused, while temporal artifacts can only be seen when video is actively playing. Intraframe-based compression algorithms like M-JPEG are less susceptible to temporal artifacts because every frame is encoded as a complete, standalone image (an I-frame), while P-frames and B-frames (the interframe types) hold only part of the image information. Interframe algorithms typically achieve better compression ratios, but at the expense of propagating compression losses to subsequent frame predictions, which often creates temporal artifacts. Objective evaluation of temporal artifacts is more challenging, though, and popular video quality assessment models often fail to account for them.

Spatial Artifacts

● Basis Pattern: Taking its name from the basis functions (mathematical transforms) used by transform-based compression algorithms, it usually occurs in textured regions like trees, fields of grass, waves, etc. Typically, if viewers notice a basis pattern, it has a strong negative impact on perceived video quality.

● Blocking: Blocking typically occurs when a heavily compressed image is streamed over a low-bandwidth connection. When the image is decompressed, the output of certain decoded blocks makes surrounding pixels appear as larger blocks. It’s usually more visible on a larger display, assuming the resolution remains the same. An increase in resolution makes blocking artifacts smaller relative to the image size and therefore less visible.

● Blurring: Colloquially referred to as “fuzziness” or “unsharpness,” blurring is a result of loss of high spatial frequency image detail, typically at sharp edges. It makes discrete objects — as opposed to the entire video — appear out of focus.

● Color Bleeding: Color bleeding occurs when the edges of one color in the image unintentionally bleed or overlap into another color. Assuming the source video wasn’t oversaturated, this artifact is caused by coarse chroma subsampling, which stores color at a lower resolution than brightness.

● Ringing: Also known as “echoing” or “ghosting,” ringing takes the form of a halo, band, or ghost near sharp edges and doesn’t move around frame to frame. It occurs during decompression because there’s insufficient data to form a sharp edge. Mathematically, this causes both over- and undershooting to occur and introduces the halo effect.

● Staircase Noise: This is a special case of blocking that appears as stair steps along a diagonal or curved edge. Staircasing can be categorized as a compression artifact (insufficient sampling rates) or a scaler artifact (spatial resolution is too low).
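
The over- and undershooting behind ringing can be illustrated with a small Python sketch. It is a simplified illustration, not how any particular codec works: it assumes a one-dimensional row of eight pixels containing a hard edge, applies a discrete cosine transform (the kind of basis-function transform many codecs rely on), and simply discards the high-frequency half of the coefficients, where a real encoder would quantize them instead. The function names and the `keep=4` cutoff are chosen for illustration only.

```python
import math

def dct(samples):
    """Orthonormal DCT-II of a 1-D list of samples."""
    n = len(samples)
    coeffs = []
    for k in range(n):
        scale = math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)
        total = sum(s * math.cos(math.pi * (i + 0.5) * k / n)
                    for i, s in enumerate(samples))
        coeffs.append(scale * total)
    return coeffs

def idct(coeffs):
    """Inverse of dct(): rebuilds samples from the coefficients."""
    n = len(coeffs)
    samples = []
    for i in range(n):
        total = 0.0
        for k, c in enumerate(coeffs):
            scale = math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)
            total += scale * c * math.cos(math.pi * (i + 0.5) * k / n)
        samples.append(total)
    return samples

def drop_high_frequencies(coeffs, keep):
    """Crude stand-in for lossy coding: zero all but the first `keep` terms."""
    return coeffs[:keep] + [0.0] * (len(coeffs) - keep)

# A hard edge: four black pixels followed by four white pixels.
edge = [0] * 4 + [255] * 4

decoded = idct(drop_high_frequencies(dct(edge), keep=4))
print([round(v) for v in decoded])
print("overshoot above 255:", max(decoded) > 255)
print("undershoot below 0:", min(decoded) < 0)
```

With too little high-frequency data left to rebuild the edge, the decoded row swings above white on one side and below black on the other. On screen, those swings are the bright and dark halos seen next to sharp edges.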

Temporal Artifacts

● Flickering: This generally refers to frequent luminance or chrominance changes over time. Fine-granularity flickering is typically seen in slow-motion sequences with large motion or texture details that make it appear to be flashing at high frequency. Coarse-granularity flickering refers to sudden luminance changes in large areas of the video. This is most likely caused by the use of group-of-pictures (GOP) structures in the compression algorithm.

● Floating: Caused by the encoder erroneously skipping predictive frames, floating refers to the illusory motion in certain regions while the surrounding areas remain static. Texture floating occurs in large areas of texture, like surfaces of water or trees, while edge floating relates to the boundaries of large texture areas, such as a shoreline.

● Jerkiness: Although not considered a true compression artifact, jerkiness, or “judder,” is the perceived uneven or wobbly motion due to frame sampling. It’s often caused by the conversion of 24 fps movies to a 30 fps or 60 fps video format, known as “3:2 pulldown” or “2:3 pulldown.” The conversion can’t create a flawless copy of the original movie because 24 does not divide evenly into 30 or 60. Judder is less noticeable at higher frame rates because objects move less between frames.

● Mosquito Noise: Also known as “edge busyness,” it resembles a mosquito flying around. A variant of flickering, it appears as haziness and/or shimmering around high-frequency content (sharp transitions between foreground entities and the background or hard edges), and can sometimes be mistaken for ringing.
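
The arithmetic behind pulldown judder can be sketched in a few lines of Python. This is a simplified model that assumes a 60 Hz output in which each film frame is repeated for either two or three display intervals; the function name `pulldown_fields` and the letter-labeled frames are illustrative only.

```python
def pulldown_fields(frames, cadence=(2, 3)):
    """Repeat each source frame 2, 3, 2, 3, ... times to fill a 60 Hz output."""
    fields = []
    for index, frame in enumerate(frames):
        repeats = cadence[index % len(cadence)]
        fields.extend([frame] * repeats)
    return fields

# Four film frames become ten output intervals.
print("".join(pulldown_fields("ABCD")))  # AABBBCCDDD

# One second of film (24 frames) fills exactly one second at 60 Hz.
one_second = pulldown_fields(range(24))
print(len(one_second))  # 60

# On-screen time per frame alternates instead of staying uniform.
hold_ms = [round(repeats * 1000 / 60, 1) for repeats in (2, 3)]
print(hold_ms)  # [33.3, 50.0]
```

At 24 fps, every frame would stay on screen for about 41.7 ms. After pulldown, frames alternate between 33.3 ms and 50 ms, and that uneven timing is what viewers perceive as judder on smooth pans.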

Learning to recognize these video artifacts enables AV managers to identify trouble spots in the transmission pipeline, resulting in improved viewer experiences and fewer help desk tickets.

*Source: Conviva, Viewer Experience Report, 2013.

John Urban is a product marketing manager at Biamp Systems.